Migrate Docker Containers from VM to NFS Share?

Hello everybody, happy Monday.

I'm hoping to get a little help with my most recent self-hosting project. I've created a VM on my Proxmox instance with a 32GB disk and installed Ubuntu, Docker, and CosmOS to it. Currently I have Gitea, Home Assistant, NextCloud, and Jellyfin installed via CosmOS.

If I want to add more services to Cosmos, then I need to be able to move the containers from the VM's 32GB disk into an NFS Share mounted on the VM which has something like 40TB of storage at the moment. My hope is that moving these Containers will allow them to grow on their own terms while leaving the OS disk the same size.

Would some kind of link allow me to move the files to the NFS share while making them still appear in their current locations in the host OS (Ubuntu 24.04)? I'm not concerned about the NFS share being unavailable: it runs on the same server that virtualizes everything else, and it's configured to start before everything else, so the share should be up and running before the VM needs it. If anyone can see an obvious problem with that premise though, I'd love to hear about it.
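For what it's worth, the plain "link" idea can be sketched with a symlink: move a directory onto the share, then leave a symlink at the old path. This is a minimal sketch using throwaway temp directories; the paths and the `relocate_dir` helper are hypothetical stand-ins, not anything specific to Cosmos or Docker. It only shows the link mechanics and says nothing about how well a given container's data behaves on NFS.

```shell
#!/bin/sh
# relocate_dir SRC DEST_PARENT: move SRC under DEST_PARENT and leave a symlink at SRC.
# Hypothetical helper for illustration; stop any containers using SRC first.
relocate_dir() {
    src=$1
    dest_parent=$2
    name=$(basename "$src")
    mv "$src" "$dest_parent/$name" || return 1
    ln -s "$dest_parent/$name" "$src"
}

# Demo with temp directories standing in for the VM disk and the NFS mount.
demo_root=$(mktemp -d)
mkdir -p "$demo_root/vm_disk/appdata" "$demo_root/nfs_share"
echo "hello" > "$demo_root/vm_disk/appdata/config.txt"

relocate_dir "$demo_root/vm_disk/appdata" "$demo_root/nfs_share"

# The file is still reachable at its old path, but now lives on the "share".
cat "$demo_root/vm_disk/appdata/config.txt"
```

A bind mount (`mount --bind`) would be another way to get the same effect without a symlink.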

How to change qBittorrent admin password in docker-container?

I'm currently trying to spin up a new server stack including qBittorrent. When I launch the web UI, it asks for a login on first launch. According to the documentation, the default user is admin and the default password is adminadmin.

Solved:

For qBittorrent ≥ v4.6.1, a randomly generated password is created at startup on the initial run of the program. After starting the container, enter the following into a terminal:

docker logs qbittorrent (or sudo docker logs qbittorrent, if your user does not have permission to talk to the Docker daemon)

The command should return:

******** Information ********
To control qBittorrent, access the WebUI at: http://localhost:5080
The WebUI administrator username is: admin
The WebUI administrator password was not set. A temporary password is provided for this session: G9yw3qSby
You should set your own password in program preferences.

Use this password to log in for this session. Then set a new password by opening http://{localhost}:5080 and navigating to Tools -> Options -> WebUI -> Change current password. Don't forget to save.
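If you'd rather not scan the log by eye, the password can be pulled out with a small filter. A minimal sketch, assuming the log line format quoted above and the container name `qbittorrent`; the `extract_temp_password` helper is a hypothetical name:

```shell
#!/bin/sh
# Print the temporary WebUI password found in qBittorrent log text on stdin.
# Assumes the "temporary password is provided for this session" line format.
extract_temp_password() {
    sed -n 's/.*temporary password is provided for this session: *\([^ ]*\).*/\1/p' | tail -n 1
}

# Example with the log text from above. Against a real container this would be:
#   docker logs qbittorrent 2>&1 | extract_temp_password
sample_log='The WebUI administrator password was not set. A temporary password is provided for this session: G9yw3qSby'
printf '%s\n' "$sample_log" | extract_temp_password
```

The `tail -n 1` keeps only the most recent match, in case the container has been restarted and logged more than one temporary password.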

Can't access immich on local network

I've been banging my head on this for a few days now, and I can't figure this out. When I start up the Immich container, I see this in docker ps:

CONTAINER ID   IMAGE                                     COMMAND                  CREATED              STATUS                        PORTS                                                            NAMES
1c496e061c5c   ghcr.io/immich-app/immich-server:release   "tini -- /bin/bash s…"   About a minute ago   Up About a minute (healthy)   2283/tcp, 0.0.0.0:2284->3001/tcp, [::]:2283->3001/tcp   immich

netstat shows that port 2283 is listening, but I cannot access http://IP_ADDRESS:2283 from Windows, Linux, or Mac host. If I SSH in and run a browser back through that, I can't access it via localhost. I even tried changing the port to 2284. I can see the change in netstat and docker ps outputs, but still no luck accessing it. I also can't telnet to either port on the host. I know Immich is up because it's accessible via the swag reverse proxy (I've also tried bringing it up w/ that disabled). I don't see anything in the logs of any of the immich containers or any of the host system logs when I try to access.
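One thing that may be worth reading closely is the PORTS column. In `docker ps` output, an entry with `->` is a host port published to a container port, while a bare entry such as `2283/tcp` is a port the image exposes that is not published. The sketch below just splits a PORTS string along those lines (the `describe_ports` helper is a hypothetical name). In the output quoted above, both host ports forward to container port 3001 while 2283/tcp has no host mapping; whether that is the actual cause here is an assumption, not something the post confirms.

```shell
#!/bin/sh
# List the host->container port mappings in a `docker ps` PORTS string.
# Entries without "->" are exposed by the image but not published to the host.
describe_ports() {
    printf '%s\n' "$1" | tr ',' '\n' | sed 's/^ *//' | while IFS= read -r entry; do
        case $entry in
            *'->'*)
                host=${entry%%->*}
                cont=${entry##*->}
                echo "published: $host -> container $cont"
                ;;
            *)
                echo "exposed only (not published): $entry"
                ;;
        esac
    done
}

describe_ports '2283/tcp, 0.0.0.0:2284->3001/tcp, [::]:2283->3001/tcp'
```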

All of this came about because I ran into the Cloudflare upload size limit and it seems I can't get around it for the strangest reason!

Using refurbished HDDs in my livingroom NAS

Hello all,

This is a follow-up to my previous post: Is it a good idea to purchase refurbished HDDs off Amazon?

In this post I will share my experience purchasing refurbished hard drives and upgrading my BTRFS RAID10 array by swapping all 4 drives.

TL;DR: All 4 drives work fine. I was able to replace the drives in my array one at a time using a USB enclosure for the data transfer!

1. Purchasing & Unboxing

After reading the replies to my previous post, I ended up purchasing 4x WD Ultrastar DC HC520 12TB hard drives from eBay (Germany). The delivery was pretty fast: I received the package within 2 days. The drives were very well packed by the seller, in a special styrofoam tray and anti-static bags!

2. Sanity check

I connected the drives to a spare computer I have and booted an Ubuntu Live USB to run a S.M.A.R.T. check and read the values. SMART checks and data are available from GNOME Disks (gnome-disk-utility), if you don't want to bother with the terminal.

All 4 disks passed the self-check. I even ran a complete check on 2 of them overnight, and they both passed without any errors.

More surprisingly, all 4 disks report Power-On Hours=N/A or 0. I don't think it means they are brand new; I suspect the values have been erased by the reseller.

3. Back up everything!

I've selected one of the 12TB drives and installed it inside an external USB3 enclosure. On my PC I formatted the drive to BTRFS with one partition with the entire capacity of the disk.

I then connected the now-external drive to the NAS and transferred all of my files (excluding a couple of things I definitely don't need) using rsync:

rsync -av --progress --exclude 'lost+found' --exclude 'quarantine' --exclude '.snapshots' /mnt/volume1/* /media/Backup_2024-10-12.btrfs --log-file="$HOME/rsync_backup_20241012.log"

Actually, I wanted to run the command detached, so I used the at command (not sure if this is the best method to do this, feel free to propose some alternatives):

echo "rsync -av --progress --exclude 'lost+found' --exclude 'quarantine' --exclude '.snapshots' /mnt/volume1/* /media/Backup_2024-10-12.btrfs --log-file=$HOME/rsync_backup_20241012.log" | at 23:32

The total volume of data is 7.6TiB, and the transfer took 19 hours to complete.
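As a rough sanity check on those numbers, the average throughput can be worked out from the size and the duration. A small sketch; `avg_mib_per_s` is a hypothetical helper name, and the figure is only an average over the whole run:

```shell
#!/bin/sh
# Average throughput in MiB/s for SIZE_TIB tebibytes moved in HOURS hours.
avg_mib_per_s() {
    awk -v tib="$1" -v hours="$2" \
        'BEGIN { printf "%.1f\n", tib * 1024 * 1024 / (hours * 3600) }'
}

avg_mib_per_s 7.6 19   # the rsync backup described above; prints 116.5
```

Roughly 116 MiB/s sustained looks plausible for a single hard drive in a USB3 enclosure.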

4. Replacing the drives

My RAID10 array, a.k.a. volume1, is composed of the disks sda, sdb, sdc and sdd, all of which are 6TB drives. My NAS has only 4x SATA ports and all of them are occupied (volume2 is an SSD connected via USB3).

m4nas:~:% lsblk
NAME         MAJ:MIN RM   SIZE RO TYPE MOUNTPOINT
sda            8:0    1   5.5T  0 disk /mnt/volume1
sdb            8:16   1   5.5T  0 disk
sdc            8:32   1   5.5T  0 disk
sdd            8:48   1   5.5T  0 disk
sde            8:64   0 111.8G  0 disk
└─sde1         8:65   0 111.8G  0 part /mnt/volume2
sdf            8:80   0  10.9T  0 disk
mmcblk2      179:0    0  58.2G  0 disk
└─mmcblk2p1  179:1    0  57.6G  0 part /
mmcblk2boot0 179:32   0     4M  1 disk
mmcblk2boot1 179:64   0     4M  1 disk
zram0        252:0    0   1.9G  0 disk [SWAP]

According to the documentation I could find (btrfs replace - readthedocs.io, Btrfs, replace a disk - tnonline.net), the best course of action is definitely to use the built-in BTRFS command replace.

From there, there are 2 methods I can use:

- Connect the new drives, one by one, via USB3 to run replace, then swap the disks in the drive bay

- Degraded mode: swap the disks one by one in the drive bays and rebuild the array

Method #1 seemed faster and safer to me, so I decided to try it first. If it didn't work, I could fall back to method #2 (which I had to do for one of the disks!).

4.a. Replace the disks one-by-one via USB

I've installed a blank 12TB disk in my USB enclosure and connected it to the NAS. It shows up as sdf.

Now, it's time to run the replace command as described here: Btrfs, Replacing a disk, Replacing a disk in a RAID array

sudo btrfs replace start 1 /dev/sdf /mnt/volume1

We can see the new disk is shown as ID 0 while the replace operation takes place:

```bash
m4nas:~:% btrfs filesystem show
Label: 'volume1'  uuid: 543e5c4f-4012-4204-bf28-1e4e651ce2e8
    Total devices 4 FS bytes used 7.51TiB
    devid    0 size 5.46TiB used 3.77TiB path /dev/sdf
    devid    1 size 5.46TiB used 3.77TiB path /dev/sda
    devid    2 size 5.46TiB used 3.77TiB path /dev/sdb
    devid    3 size 5.46TiB used 3.77TiB path /dev/sdc
    devid    4 size 5.46TiB used 3.77TiB path /dev/sdd

Label: 'ssd1'  uuid: 0b28580f-4a85-4650-a989-763c53934241
    Total devices 1 FS bytes used 46.78GiB
    devid    1 size 111.76GiB used 111.76GiB path /dev/sde1
```

It took around 15 hours to replace the disk. After it's done, I've got this:

```bash
m4nas:~:% sudo btrfs replace status /mnt/volume1
Started on 19.Oct 12:22:03, finished on 20.Oct 03:05:48, 0 write errs, 0 uncorr. read errs
m4nas:~:% btrfs filesystem show
Label: 'volume1'  uuid: 543e5c4f-4012-4204-bf28-1e4e651ce2e8
    Total devices 4 FS bytes used 7.51TiB
    devid    1 size 5.46TiB used 3.77TiB path /dev/sdf
    devid    2 size 5.46TiB used 3.77TiB path /dev/sdb
    devid    3 size 5.46TiB used 3.77TiB path /dev/sdc
    devid    4 size 5.46TiB used 3.77TiB path /dev/sdd

Label: 'ssd1'  uuid: 0b28580f-4a85-4650-a989-763c53934241
    Total devices 1 FS bytes used 15.65GiB
    devid    1 size 111.76GiB used 111.76GiB path /dev/sde1
```

In the end, the swap from USB to SATA worked perfectly!

```bash
m4nas:~:% lsblk
NAME         MAJ:MIN RM   SIZE RO TYPE MOUNTPOINT
sda            8:0    0 111.8G  0 disk
└─sda1         8:1    0 111.8G  0 part /mnt/volume2
sdb            8:16   1  10.9T  0 disk /mnt/volume1
sdc            8:32   1   5.5T  0 disk
sdd            8:48   1   5.5T  0 disk
sde            8:64   1   5.5T  0 disk
mmcblk2      179:0    0  58.2G  0 disk
└─mmcblk2p1  179:1    0  57.6G  0 part /
mmcblk2boot0 179:32   0     4M  1 disk
mmcblk2boot1 179:64   0     4M  1 disk
zram0        252:0    0   1.9G  0 disk [SWAP]
zram1        252:1    0    50M  0 disk /var/log
m4nas:~:% btrfs filesystem show
Label: 'volume1'  uuid: 543e5c4f-4012-4204-bf28-1e4e651ce2e8
    Total devices 4 FS bytes used 7.51TiB
    devid    1 size 5.46TiB used 3.77TiB path /dev/sdb
    devid    2 size 5.46TiB used 3.77TiB path /dev/sdc
    devid    3 size 5.46TiB used 3.77TiB path /dev/sdd
    devid    4 size 5.46TiB used 3.77TiB path /dev/sde

Label: 'ssd1'  uuid: 0b28580f-4a85-4650-a989-763c53934241
    Total devices 1 FS bytes used 13.36GiB
    devid    1 size 111.76GiB used 89.76GiB path /dev/sda1
```

Note that I haven't expanded the filesystem to 12TB yet; I will do this once all the disks are replaced.

The replace operation has to be repeated 3 more times, taking great care each time to select the correct disk ID (2, 3 and 4) and replacement device (e.g. /dev/sdf).
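To make the repetition less error-prone, the commands for the remaining disks can be printed as a checklist before anything is run. This is only a dry-run sketch: `print_replace_plan` is a hypothetical helper that echoes the commands rather than executing them, and it assumes the new disk shows up as /dev/sdf each time, which should be confirmed with lsblk before every step.

```shell
#!/bin/sh
# Print (not run) the replace and status commands for each remaining devid.
# Arguments: NEW_DEVICE MOUNTPOINT DEVID...
print_replace_plan() {
    new_dev=$1
    mount_point=$2
    shift 2
    for devid in "$@"; do
        echo "sudo btrfs replace start $devid $new_dev $mount_point"
        echo "sudo btrfs replace status $mount_point"
    done
}

print_replace_plan /dev/sdf /mnt/volume1 2 3 4
```

Each replace has to finish, and the disk has to be moved from the enclosure into its bay, before the next one starts, so the printed lines are a sequence to follow by hand rather than a script to pipe into a shell.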

4.b. Issue with replacing disk 2

While replacing disk 2, a problem occurred. The replace operation stopped progressing, despite not reporting any errors. After waiting a couple of hours and confirming it was stuck, I decided to do something reckless that caused me a great deal of trouble later: to kick-start the replace operation, I unplugged the power from the USB enclosure and plugged it back in (DO NOT DO THAT!). It seemed to work, and the transfer started to progress again. But once it completed, the RAID array was broken and the NAS wouldn't boot anymore. (I will only talk about the things relevant to the disk replacement and will skip all the stupid things I did to make the situation worse. It took me a good 3 days to recover and get back on track...)

I had to forget and remove from the RAID array both drive ID=2 (the drive being replaced) and ID=0 (the 'new' drive) in order to mount the array in degraded mode and start the replace operation over with method #2. In the end it worked, and the 12TB drive is fully functional. I suppose the USB enclosure is not the most reliable, but the next 2 replacements worked just fine, like the first one.

What I should have done: abort the replace operation, and start over.
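For reference, btrfs has a `replace cancel` subcommand for this situation. Below is a hedged sketch of what "abort and start over" could look like; the `restart_replace` helper and the `RUN` variable are hypothetical, and by default the commands are only printed. This is not what was actually run during the recovery described above.

```shell
#!/bin/sh
# Cancel a stuck replace and start it again for the same devid.
# RUN defaults to "echo" so the commands are only printed; set RUN=sudo to execute.
RUN=${RUN:-echo}

restart_replace() {
    devid=$1
    new_dev=$2
    mount_point=$3
    $RUN btrfs replace cancel "$mount_point" &&
    $RUN btrfs replace start "$devid" "$new_dev" "$mount_point"
}

restart_replace 2 /dev/sdf /mnt/volume1
```

After a cancelled run the target disk may already carry a partial btrfs signature, in which case `btrfs replace start` may need its `-f` flag to overwrite it. Treat that as something to check against the man page rather than a given.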

4.c. Extend volume to complete drives

Now that all 4 drives in my RAID array are upgraded to 12TB, I extended the filesystem to use all of the available space:

sudo btrfs filesystem resize 1:max /mnt/volume1
sudo btrfs filesystem resize 2:max /mnt/volume1
sudo btrfs filesystem resize 3:max /mnt/volume1
sudo btrfs filesystem resize 4:max /mnt/volume1

5. Always keep a full backup!

Earlier, I mentioned using one of the 'new' 12TB drives as a backup of my data. Before using it in the NAS, and therefore erasing this backup, I installed 2 of the old drives in my spare computer and once again made a full copy of my NAS data using rsync over the network. This took a long while again, but I wouldn't skip this step!

6. Conclusion: what did I learn?

- Buying and using refurbished drives was very easy and the savings are great! I saved approximately 40% compared to the new price. Only time will tell if this was a good deal. I hope to get at least 4 more years out of these drives. That's my goal at least...

- Replacing HDDs via a USB3 enclosure is possible with BTRFS; it works 3 times out of 4! 😭

- Serial debug is my new best friend! I didn't detail this part in this post. Let's say my NAS is a somewhat exotic NanoPi M4V2. I couldn't have unborked my system without a functioning UART adapter, and the one I already had on hand didn't work correctly, so I had to buy a new one. And all the things I did (blindly) to try fixing my system were pointless and wrong.

I hope this post can be useful to someone in the future, or at least was interesting to some of you!

I present: Managarr - A TUI and CLI to help you manage your Servarr instances

A TUI for managing *arr servers. Built with 🤎 in Rust - Dark-Alex-17/managarr

After almost 3 years of work, I've finally managed to get this project stable enough to release an alpha version!

I'm proud to present Managarr - A TUI and CLI for managing your Servarr instances! At the moment, the alpha version only supports Radarr.

Not all features are implemented for the alpha version, like managing quality profiles or quality definitions, etc.

Here's some screenshots of the TUI:

Additionally, you can use it as a CLI for Radarr. For example, to search for a new film:

managarr radarr search-new-movie --query "star wars"

Or you can add a new movie by its TMDB ID:

managarr radarr add movie --tmdb-id 1895 --root-folder-path /nfs/movies --quality-profile-id 1

All features available in the TUI are also available via the CLI.

Anyone self-hosting ActualBudget? (with connection to bank)

Hiya, I am looking into a few different services to better manage my finances, and among the most highly recommended ones is ActualBudget. ActualBudget itself is open source and private. However, to get the most out of this service you may connect it to your bank via a third-party service. Has anyone here actually done this? The service (for EU folks) is called GoCardless. This, however, is ringing many alarms for me...

Here is the screenshot showing the message before connecting to my bank..

Here is GoCardless's list of partners/suppliers:

https://assets.ctfassets.net/40w0m41bmydz/6Mg3PGztGEQh11N3MNRmYc/1f186cf883151ca04b9c71c23b5ee4d3/GoCardless_material_supplier_list_v2024.09.pdf

I assume there is no private alternative that allows you to connect your bank to ActualBudget or another service. If there is, please let me know! Managing finances would be so much more convenient if it all was automatically synced into a self-hosted service.

Let me know how you manage your finances :)

Store (and access) old emails

Yet another question about self-hosting email, but I haven't found the answer at least phrased in a way that makes sense with my question.

I've got ~15 GB of old Gmail data that I've already downloaded, and Google is on my ass about "91% full" and we know I'm not about to pay them for storage (I'll sooner spend 100 hours trying to solve it myself before I pay them $3/month).

What I want is to have the same (or relatively close to the same) access and experience to find stuff in those old emails when they are stored on my hardware as I do when they are in my Gmail. That is, I want to have a website and/or app where I can search for emails from so-and-so, in some date range, with keywords. I don't actually want to send any emails from this server or receive anything to it (maybe I would want Gmail to forward to it or something, but probably I'd just do another archive batch every year).

What I've tried so far, which is sort of working, is that I've set up docker-mailserver on my box, and that is working and accessible. I can connect to it via Thunderbird or K-9 Mail. I also converted the big email download from Google, which was a .mbox, into maildir using mb2md (apt install mb2md on Debian was nice). This gave me a directory with ~120k individual email files.

When I check this out in Thunderbird, I see all those emails (and they look like they have the right info) (as a side - I actually only moved 1k emails into the directory that docker-mailserver has access to, just for testing, and Thunderbird only sees that 1k then). I can do some searching on those.

When I open in K-9, it by default looks like it just pulls in 100 of them. I can pull in more or refresh it sort of thing. I don't normally use K-9, so I may just be missing how the functionality there is supposed to work.

I also just tried connecting to the mail server with Nextcloud Mail, which works in the sense that it connects but it (1) seems like it is struggling, and (2) is putting 'today' as the date for all the emails rather than when they actually came through. I don't really want to use Nextcloud Mail here...

So, I think my question here is now really around search and storage. In Thunderbird, I think that the way it works (I don't normally use Thunderbird much either) is that it downloads all the files locally, and then it will search them locally. In K-9 that appears to be the same, but with the caveat that it doesn't look like it really wants to download 120k emails locally (even if I can).

What I think I want to do, though, is have the search running on the server. I don't want to download 15 GB (and another 9 from Gmail soon enough) to each client. I want it all on the server, so that I just put in my search and the server runs the query and gives me a response.

docker-mailserver has a page for setting up Full-Text Search with Xapian, where it'll make all the indices and all that. I tinkered with this and think I got it set up. This is another sort of thing where I would want the search to be utilizing the server rather than client since the server is (hopefully) optimizing for some of this stuff.
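For context on what "search on the server" means at the protocol level: IMAP itself has a SEARCH command (RFC 3501) that the server evaluates, and a full-text index like the Xapian one exists to speed up its TEXT/BODY criteria. A minimal sketch of building such a query for the "from so-and-so, in a date range, with a keyword" case; `imap_search_command`, the address, and the host below are hypothetical, and whether a given client actually issues server-side searches is up to that client.

```shell
#!/bin/sh
# Build an IMAP SEARCH command (RFC 3501) from a sender, a date range, and a keyword.
# Dates use the IMAP form d-Mon-yyyy, e.g. 1-Jan-2015. BEFORE is exclusive.
imap_search_command() {
    printf 'SEARCH FROM "%s" SINCE %s BEFORE %s TEXT "%s"\n' "$1" "$2" "$3" "$4"
}

imap_search_command "so-and-so@example.com" 1-Jan-2015 1-Jan-2016 "invoice"

# One way to send it, assuming a curl build with IMAP support and
# placeholder host/credentials:
#   curl --url "imaps://mail.example.com/INBOX" --user "me@example.com:password" \
#        -X "$(imap_search_command so-and-so@example.com 1-Jan-2015 1-Jan-2016 invoice)"
```

The server answers with the matching message sequence numbers only, so nothing has to be downloaded until a specific message is opened.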

Should I be using a different server for what I want here? I've poked around at different ones and am more than open to changing to something else that is more for what I need here.

For clients, should I be using Roundcube or something else? Will that actually help with this 'use the server to search' question? For mobile, is there any way to avoid downloading all the emails to the client?

Thanks for the help.

Excluding shorts from Youtube RSS feeds in FreshRSS, regardless of #shorts in the title

this is a really underdocumented feature that this extension supports, wanted to share it with people. i've never written and shared a blog post like this before so feel free to give me tips about documenting steps or point out any errors i made. i kinda take docker knowledge for granted, not sure if i should avoid that. here's the contents:

---

I came across documentation for this in the readme for the FreshRSS extension YoutubeChannel2RssFeed. The method involves running an instance of the Youtube-operational-API (there was a public instance that has been cease and desisted by Google, see here) and plugging the extension into it.

YoutubeChannel2RssFeed Extension

TL;DR install this extension

git clone https://github.com/cn-tools/cntools_FreshRssExtensions.git
cd cntools_FreshRssExtensions
cp -r xExtension-YouTubeChannel2RssFeed <your_freshrss_data_directory>/config/www/freshrss/extensions

Youtube-operational-API instance

Here's a Docker compose.yml for running both:

services:
  freshrss:
    image: lscr.io/linuxserver/freshrss:latest
    container_name: freshrss
    environment:
      - PUID=1000
      - PGID=1000
      - TZ=America/Los_Angeles
    volumes:
      - ./freshrss/config:/config
    ports:
      - "8811:80"
    restart: unless-stopped
  youtube-operational-api:
    container_name: freshrss-yt-o-api
    image: benjaminloison/youtube-operational-api:latest
    restart: unless-stopped
    ports:
      - "8812:80"
    depends_on:
      - freshrss

Configuring extension

In FreshRSS, log in as admin and go to Configuration > Extensions. Turn on YoutubeChannel2RssFeed under User extensions and click the gear to configure.

Set Youtube Shorts to be marked as read or blocked completely. Enter the URL for your yt-o-api instance. Based on the above compose file it would be http://freshrss-yt-o-api:8812. Submit changes.

For me this worked immediately, no shorts ever show up in FreshRSS for my Youtube feeds. I haven't seen this documented anywhere else so I wanted to mirror it somewhere.

ChartDB - open-source database diagram visualization tool

Hi all, I’m one of the creators of ChartDB.

We built ChartDB to simplify database design and visualization, providing a powerful, intuitive tool that’s fully open-source. It is similar to database diagram tools you may already know: DBeaver, dbdiagram, DrawSQL, etc.

https://github.com/chartdb/chartdb

Key Features:

- Instant schema import with just one query.

- AI-powered export to generate DDL scripts for easy database migration.

- Supports multiple database types: PostgreSQL, MySQL, SQLite, Mssql, ClickHouse and more.

- Customizable ER diagrams to visualize your database structure.

- Fully open-source and easy to self-host.

Tech Stack:

- React + TypeScript

- Vite

- ReactFlow

- Shadcn-ui

- Dexie.js

Set up Tailscale with NGINX Proxy Manager

As requested by /u/[email protected], this is a walkthrough of how I set up NGINX Proxy Manager with a custom domain to give me the simplicity of DNS access to my services with the security of Tailscale to restrict public access. This works great for things that you want easy remote access to, but don't want to have open to the internet in general (unRAID GUI, Portainer, Immich, Proxmox, etc.)

Prerequisites

- A custom domain (obviously, because that's the whole point of this tutorial)

- A Tailscale account with your devices linked to it

Steps

1. On the server that you want to serve as the entry point into your network, install the NGINX Proxy Manager Docker container (you could absolutely use a different installation method, but I prefer Docker so that's how this guide will be written)

   I. For this, I have a Raspberry Pi that is dedicated to being my network entry. This method is probably overkill for most, but for me it works wonders because I have multiple different devices working as servers and if one goes down I can still access the services hosted on the others.

   II. I'm not going to go into much detail here, because there is plenty of documentation elsewhere, but you install it the same way you would install any Docker container and follow the first-time setup

2. Log into your Tailscale account and get the Tailscale IP for the entry device (ex. 100.113.123.123)

3. Get the SSL information from NGINX Proxy Manager for your domain

   I. Navigate to "SSL Certificates" and then "Add SSL Certificate"

   II. Select "Let's Encrypt"

   III. Type in your domain/subdomain name in the first box

   IV. Enter your email address for Let's Encrypt

   V. Select "Use a DNS Challenge"

   VI. Select your DNS provider in the dropdown

   VII. From here, you're all set for now. We will continue with this later

4. In your domain DNS dashboard, you will need to do a few things (I use Cloudflare, but the process should be more or less the same with whatever provider you use):

   I. Set up an A record that points the root of your domain (or a subdomain, depending on your configuration) to your Tailscale IP from step 2

   II. Set up a wildcard record that points back to your domain root. This is important because it sends subdomain requests (e.g. service.example.org) to your root example.org, which in turn points to the Tailscale IP

   III. (This is going to be dependent on your provider) Generate an API key for NGINX Proxy Manager to use for domain verification. In Cloudflare this can be done in the API key section of the dashboard. The key needs to have permissions for Zone.DNS

5. Back in NGINX Proxy Manager, drop in your API key in the text box where it asks for it (you need to replace the sample key).

6. The hard part is done, now it's just time to add in your services!
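Before adding proxy hosts, it can help to confirm that the A record really resolves to a Tailscale address rather than a LAN or public one. Tailscale hands out IPv4 addresses from the 100.64.0.0/10 CGNAT range, so a quick range check is enough. A small sketch; `is_tailscale_ip` is a hypothetical helper name, and example.org stands in for your own domain:

```shell
#!/bin/sh
# Succeed if the IPv4 address falls in 100.64.0.0/10, the CGNAT range
# Tailscale assigns addresses from (first octet 100, second octet 64-127).
is_tailscale_ip() {
    old_ifs=$IFS
    IFS=.
    set -- $1
    IFS=$old_ifs
    [ "$#" -eq 4 ] && [ "$1" -eq 100 ] 2>/dev/null &&
        [ "$2" -ge 64 ] 2>/dev/null && [ "$2" -le 127 ] 2>/dev/null
}

if is_tailscale_ip 100.113.123.123; then
    echo "100.113.123.123 looks like a Tailscale address"
fi

# Against live DNS this could be used as:
#   is_tailscale_ip "$(dig +short example.org | head -n 1)"
```

If the check fails for your domain, the record is probably still pointing somewhere else or has not propagated yet.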

Here's an example of proxying Portainer through NGINX Proxy Manager:

1. Might be obvious, but open up NGINX Proxy Manager

2. Click "Add Proxy Host"

3. Type in the URL that you want to use for navigating to the host. I prefer subdomains (e.g. portainer.example.org)

4. Type in the IP address and port for the service

   I. Here's the neat part: because NGINX is running in Tailscale, you can connect both to other services in your tailnet and to other devices running in your network that don't necessarily have Tailscale running on them.

   II. An example of this would be if you have two houses (yours and your friend's), where you have services deployed at both locations. You can have NGINX reach out through Tailscale to the other device and proxy the service through your main network without needing to set it up twice. Neat, right?

   III. Conversely, if you have a server running in your network that you cannot install Tailscale onto (for support reasons, security reasons, whatever), you can just use the internal IP for that device, as long as the device NGINX Proxy Manager is running on can access it.

5. Navigate to the SSL tab of the window, and select your recently generated Let's Encrypt certificate

6. And you're done

Now, you can connect your phone or laptop to Tailscale, and navigate to the URL that you configured. You should see your service load up, with SSL, and you can access it normally. No more remembering IP addresses and port numbers! I don't personally meet this usecase, but this solution could also be useful for people running their homelab behind CGNAT where they can't open ports easily -- this would allow them to access any service remotely via Tailscale easily.

EDIT: The picture formatting is weird and I'm not really sure how else to do it. Let me know if there's a better way :)

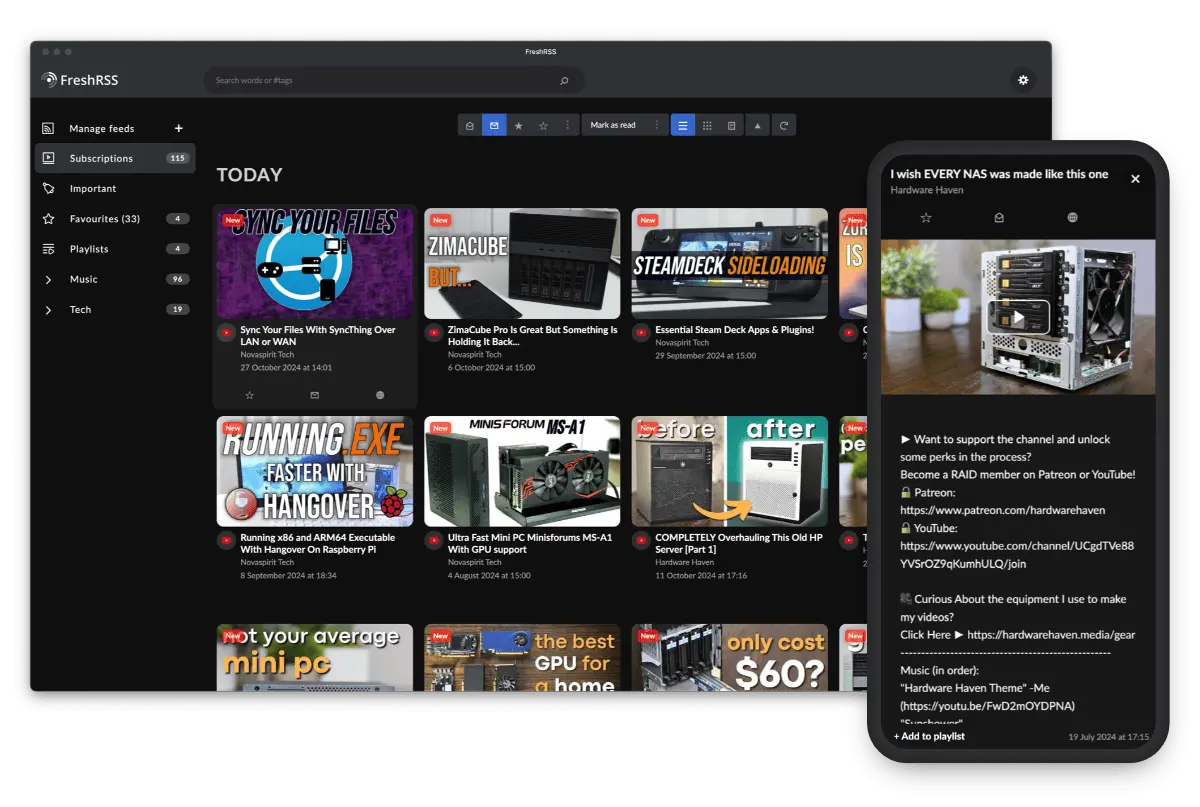

Browse FreshRSS like YouTube: "Youlag Theme for FreshRSS"

"Youlag Theme for FreshRSS" provides a video-focused browsing experience for your RSS subscriptions. It tries to provide a similar experience to YouTube, primarily through its layout using CSS, but also a little bit of Javascript.

Git repo for more details: https://github.com/civilblur/youlag

In case you're not aware, "FreshRSS is a self-hosted RSS feed aggregator".

---

The idea is that you subscribe to content creators through YouTube's RSS feed https://www.youtube.com/feeds/videos.xml?channel_id={id_goes_here}, then browse, watch, save to playlist ("label"), right within FreshRSS.
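If you have a list of channel IDs, the feed URLs can be generated from the pattern above. A tiny sketch; `youtube_feed_url` is a hypothetical helper name and the channel ID is a placeholder, not a real channel:

```shell
#!/bin/sh
# Build the YouTube RSS feed URL for a channel ID, using the pattern quoted above.
youtube_feed_url() {
    printf 'https://www.youtube.com/feeds/videos.xml?channel_id=%s\n' "$1"
}

youtube_feed_url "UC_placeholder_channel_id"
```

The resulting URL is what gets pasted into FreshRSS when adding a subscription.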

There's also a third-party extension for FreshRSS that provides the ability to use Invidious, but as we all know, the public instances are unfortunately struggling quite a bit as of writing.

Static site generator for an idiot who doesn't want to learn a new templating language just to have a blog?

Hi,

I'm interested in setting up a small static-site-generator site. Looked at 11ty recently and feel pretty uncomfortable with the amount of javascript and "funny language" churn just to make some html happen.

Do you know of any alternative that's simpler / easier / less complicated dependencies? Or do you have an approach to 11ty that you think I should try?

Thanks in advance for any input, it's appreciated!

Ditching the VPN and port forwarding the selfhosted way

For folks that are unable to port forward on the local router (eg CGNAT) I made this post on doing it via a VPS. I've scoured the internet and didn't find a complete guide.

Some docker containers need manual start after host reboot

I generally let my server do its thing, but I run into an issue consistently when I install system updates and then reboot: Some docker containers come online, while others need to be started manually. All containers were running before the system shut down.

- My containers are managed with docker compose.

- Their compose files have restart: always

- It's not always the same containers that fail to come online

- Some of them depend on an NFS mount point being ready on the host, but not all

Host is running Ubuntu Noble

Most of these containers were migrated from my previous server, and this issue never manifested.

I wonder if anyone has ideas for what to look for?

SOLVED

The issue was that docker was starting before my NFS mount point was ready, and the containers which depended on it were crashing.

Symptoms:

journalctl -b0 -u docker showed the following log lines (-b0 means to limit logs to the most recent boot):

level=error msg="failed to start container" container=fe98f37d1bc3debb204a52eddd0c9448e8f0562aea533c5dc80d7abbbb969ea3 error="error while creating mount source path '/mnt/nas/REDACTED': mkdir /mnt/nas/REDACTED: operation not permitted"
...
level=warning msg="ShouldRestart failed, container will not be restarted" container=fe98f37d1bc3debb204a52eddd0c9448e8f0562aea533c5dc80d7abbbb969ea3 daemonShuttingDown=true error="restart canceled" execDuration=5m8.349967675s exitStatus="{0 2024-10-29 00:07:32.878574627 +0000 UTC}" hasBeenManuallyStopped=false restartCount=0

I had previously set my mount directory to be un-writable if the NFS were not ready, so this lined up with my expectations.

I couldn't remember how systemd names mount points, but the following command helped me find it:

systemctl list-units -t mount | grep /mnt/nas

It gave me mnt-nas.mount as the name of the mount unit, so then I just added it to the After= and Requires= lines in my /etc/systemd/system/docker.service file:

[Unit]
Description=Docker Application Container Engine
Documentation=https://docs.docker.com
After=network-online.target docker.socket firewalld.service containerd.service time-set.target mnt-nas.mount
Wants=network-online.target containerd.service
Requires=docker.socket mnt-nas.mount
...
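An alternative that avoids editing the whole unit file is a systemd drop-in using `RequiresMountsFor=`, which adds the Requires= and After= dependencies on whatever mount unit backs a path. This is a sketch of a different approach, not what was done above; `write_docker_dropin` and the `wait-for-nfs.conf` file name are hypothetical, and the demo writes to a temp directory so it is safe to run as-is.

```shell
#!/bin/sh
# Write a drop-in that makes docker.service wait for the mount backing a path.
# On a real host DROPIN_DIR would be /etc/systemd/system/docker.service.d (needs root).
write_docker_dropin() {
    mount_path=$1
    dropin_dir=$2
    mkdir -p "$dropin_dir" || return 1
    cat > "$dropin_dir/wait-for-nfs.conf" <<EOF
[Unit]
RequiresMountsFor=$mount_path
EOF
}

demo_dir=$(mktemp -d)
write_docker_dropin /mnt/nas "$demo_dir"
cat "$demo_dir/wait-for-nfs.conf"

# After writing the real file: sudo systemctl daemon-reload
```

With this form there is no need to look up the mount unit name, since systemd derives it from the path.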

If you hoard video games and aren’t selfhosting GameVault yet, you’re missing out!

Hey everyone,

it’s me again, one of the two developers behind GameVault, a self-hosted gaming platform similar to how Plex/Jellyfin is for your movies and series, but for your game collection. If you've hoarded a bunch of games over the years, this app is going to be your best friend. Think of it as your own personal Steam, hosted on your own server.

If you haven’t heard of GameVault yet, you can check it out here and get started within 5 minutes—seriously, it’s a game changer.

For those who already know GameVault, or its old name He-Who-Must-Not-Be-Named, we are excited to tell you we just launched a major update. I’m talking a massive overhaul—so much so, that we could’ve rebuilt the whole thing from scratch. Here’s the big news: We’re no longer relying on RAWG or Google Images for game metadata. Instead, we’ve officially partnered with IGDB/Twitch for a more reliable and extended metadata experience!

But it doesn’t stop there. We’ve also rolled out a new plugin system and a metadata framework that allows you to connect to multiple metadata providers at once. It’s never been this cool to run your own Steam-like platform right from your good ol' 19" incher below your desk!

What’s new in this update?

- IGDB/Twitch Integration: Say goodbye to unreliable metadata scrapers. Now you can enjoy game info sourced directly from IGDB.

- Customizable Metadata: Edit and fine-tune game metadata with ease. Your changes are saved separately, so the original data stays intact.

- Plugin System: Build your own plugins for metadata or connect to as many sources as you want—unlimited flexibility!

- Parental Controls: Manage age-appropriate access for the family and children.

- Built-in Media Player: Watch game trailers and gameplay videos directly in GameVault.

- UI Overhaul: A fresh, streamlined look for the app, community, game and admin interface.

- Halloween Theme: For GameVault+ users, we’ve added a spooky Halloween skin just in time for the season!

Things to keep in mind when updating:

- GameVault Client v1.12 is now required for servers running v13 or above.

- Older clients won’t work on servers that have been updated to v13.

For a smooth update and a guide on how to use all these new features, check out the detailed migration instructions in the server changelogs. As always, if you hit any snags, feel free to reach out to us on Discord.

If you run into any issues or need help with the migration, feel free to join and open a ticket in our Discord community—we’re always happy to help!

If you want to support our pet project and keep most upcoming features of GameVault free for everyone, consider subscribing to GameVault+ or making a one-time donation. Every little bit fuels our passion to keep building and improving!

Thanks for everything! We now have more than 800 members on our Discord, and I can’t wait to hear what you think of the latest version.

Results comparison 8B parameter LLM x Gemini

My RTX 2060 computer running DarkPlanet 8B:

Me: What is isotony?

Bot: Isotony, a term coined by the mathematician and physicist Robert Hermann in the 1970s, refers to the property of a Lie algebra that ensures the commutator of any two elements is also an element of the algebra. In other words, isotony (or the isotonicity of the Lie bracket) means that the Lie algebra is closed under the commutator operation.

Formally, a Lie algebra G over a field k is said to be isotonic if for any u, v ∈ G, [u, v] ∈ G, where [ , ] denotes the Lie bracket (or commutator). This implies that the Lie algebra is a subset of itself under the commutator operation, making it a Lie subalgebra of itself.

Isotony has significant consequences in the theory of Lie algebras and their representations:

Cohomology: Isotony is a crucial condition for the development of Lie algebra cohomology, which studies the obstructions to extending Lie algebra homomorphisms. It ensures that the cohomology groups are well-defined.

Representations: The isotony property is essential for the classification of finite-dimensional representations of semisimple Lie algebras. It guarantees that the representation space remains unchanged under the commutator operation, facilitating the construction of higher-order tensors and invariant polynomials.

Geometry: In the context of differential geometry, isotonic Lie algebras are linked to the structure of geometric objects, such as tangent spaces and cotangent spaces. This relationship is exploited in the study of Lie groups, symplectic manifolds, and Poisson geometry.

Physics: In quantum mechanics and gauge theories, isotony is relevant to the definition of observables and their algebraic properties under commutation. The isotonicity of the Lie algebra of observables ensures that the commutator of any two observables remains an observable, which is vital for the consistency of the theory.

It's worth noting that not all Lie algebras are isotonic. For instance, the algebra of polynomial vector fields on ℝ² is not isotonic, since the commutator of two such fields may not be a polynomial vector field.

In summary, isotony is a fundamental property of Lie algebras that ensures their closure under the commutator operation. It has far-reaching implications for the classification of Lie algebras, their representations, cohomology, and connections to differential geometry and physics. Do you have any more questions about isotony or its applications?

Gemini:

Me: What is isotony?

Bot: "Isotonic" has different meanings depending on the context. Here are the most common ones:

- In Chemistry/Biology:

- Isotonic solutions: Refer to solutions that have the same osmotic pressure. This means they have the same concentration of solutes. Cells placed in an isotonic solution will neither shrink nor swell because there's no net movement of water across the cell membrane. This is important in biology and medicine, especially when administering fluids intravenously.

- In Exercise Physiology:

- Isotonic muscle contractions: Describe muscle contractions where the muscle changes length while maintaining a constant tension. There are two types:

- Concentric contractions: The muscle shortens as it contracts (e.g., lifting a weight).

- Eccentric contractions: The muscle lengthens as it contracts (e.g., lowering a weight).

- Other less common meanings:

- In music: Refers to equal tones or intervals.

- In mathematics: Can sometimes refer to functions that preserve order (though "order-preserving" or "monotone" are more common terms in this context).

To understand the specific meaning of "isotonic," it's essential to consider the context in which it's used.

Here are some examples to illustrate the different meanings:

- "The doctor administered an isotonic saline solution to the patient." (Chemistry/Biology)

- "Lifting a dumbbell is an example of an isotonic concentric contraction." (Exercise Physiology)

- "The two notes played an isotonic interval." (Music)

If you can provide more context about where you encountered the term "isotonic," I can help you pinpoint its specific meaning.

------

Both were given a mathematical space context before asking the question.

Penpot: An open-source design tool for design & code collaboration

Penpot: The open-source design tool for design and code collaboration - penpot/penpot

Designers can create stunning designs, interactive prototypes, and design systems at scale, while developers enjoy ready-to-use code and make their workflow easy and fast.

Designed for developers

Penpot was built to serve both designers and developers and create a fluid design-code process. You have the choice to enjoy real-time collaboration or play "solo".

Inspect mode

Work with ready-to-use code and make your workflow easy and fast. The inspect tab gives instant access to SVG, CSS and HTML code.

Self host your own instance

Provide your team or organization with a completely owned collaborative design tool. Use Penpot's cloud service or deploy your own Penpot server.

Integrations

Penpot offers integration into the development toolchain, thanks to its support for webhooks and an API accessible through access tokens.

Self hosting instructions (via docker) can be found here: https://help.penpot.app/technical-guide/getting-started/#install-with-docker

wireguard docker client error with ip6_tables

Hi! I am trying to set up a WireGuard client in Docker. I use the linuxserver image. I have it running in server mode on a different machine (exactly the same Ubuntu version) and I can log in to the WireGuard server with my laptop, but the Docker wg-client has problems. I hope someone has an idea :)

The client docker container has trouble starting and throws this error:

modprobe: FATAL: Module ip6_tables not found in directory /lib/modules/6.8.0-47-generic
ip6tables-restore v1.8.10 (legacy): ip6tables-restore: unable to initialize table 'raw'
Error occurred at line: 1
Try 'ip6tables-restore -h' or 'ip6tables-restore --help' for more information.

I copied the config to the server with the wg server running, it has the same problem with the client.

I can ping google.com from inside the server container, but not from inside the client container.

Here is the output of the 'route' command from the client:

Kernel IP routing table
Destination Gateway Genmask Flags Metric Ref Use Iface
172.18.0.0 * 255.255.0.0 U 0 0 0 eth0

I searched for a solution quite a bit, but can't seem to find something that works. I changed the .yml compose file according to some suggestions, but without success.

I tried to install the missing module but could not get it working.

It's a completely clean install of Ubuntu 24.04.1 LTS, Kernel: Linux 6.8.0-47-generic.

Here is the compose file, in case it's needed. It should be exactly the same as the one provided by linuxserver on their GitHub:

compose file:

```
services:
  wireguard:
    image: lscr.io/linuxserver/wireguard:latest
    container_name: wireguard-client
    cap_add:
      - NET_ADMIN
      - SYS_MODULE #optional
    environment:
      - PUID=1000
      - PGID=1000
      - TZ=Europe/Berlin
      - SERVERURL=wireguard.domain.com #optional
      - SERVERPORT=51820 #optional
      - PEERS=1 #optional
      - PEERDNS=auto #optional
      - INTERNAL_SUBNET=10.13.13.0 #optional
      - ALLOWEDIPS=0.0.0.0/0 #optional
      - PERSISTENTKEEPALIVE_PEERS= #optional
      - LOG_CONFS=true #optional
    volumes:
      - /srv/wireguard/config:/config
      - /lib/modules:/lib/modules #optional
    ports:
      - 51820:51820/udp
    sysctls:
      - net.ipv4.conf.all.src_valid_mark=1
    restart: unless-stopped
```

here is the complete error log from the wg-client docker:

error

```
[migrations] started
[migrations] no migrations found
usermod: no changes
───────────────────────────────────────

      ██╗     ███████╗██╗ ██████╗
      ██║     ██╔════╝██║██╔═══██╗
      ██║     ███████╗██║██║   ██║
      ██║     ╚════██║██║██║   ██║
      ███████╗███████║██║╚██████╔╝
      ╚══════╝╚══════╝╚═╝ ╚═════╝

   Brought to you by linuxserver.io
───────────────────────────────────────

To support the app dev(s) visit:
WireGuard: https://www.wireguard.com/donations/

To support LSIO projects visit:
https://www.linuxserver.io/donate/

───────────────────────────────────────
GID/UID
───────────────────────────────────────

User UID: 1000
User GID: 1000
───────────────────────────────────────
Linuxserver.io version: 1.0.20210914-r4-ls55
Build-date: 2024-10-10T11:23:38+00:00
───────────────────────────────────────

Uname info: Linux ec3813b50277 6.8.0-47-generic #47-Ubuntu SMP PREEMPT_DYNAMIC Fri Sep 27 21:40:26 UTC 2024 x86_64 GNU/Linux
**** It seems the wireguard module is already active. Skipping kernel header install and module compilation. ****
**** Client mode selected. ****
[custom-init] No custom files found, skipping...
**** Disabling CoreDNS ****
**** Found WG conf /config/wg_confs/peer1.conf, adding to list ****
**** Activating tunnel /config/wg_confs/peer1.conf ****
[#] ip link add peer1 type wireguard
[#] wg setconf peer1 /dev/fd/63
[#] ip -4 address add 10.13.13.2 dev peer1
[#] ip link set mtu 1420 up dev peer1
[#] resolvconf -a peer1 -m 0 -x
s6-rc: fatal: unable to take locks: Resource busy
[#] wg set peer1 fwmark 51820
[#] ip -6 route add ::/0 dev peer1 table 51820
[#] ip -6 rule add not fwmark 51820 table 51820
[#] ip -6 rule add table main suppress_prefixlength 0
[#] ip6tables-restore -n
modprobe: FATAL: Module ip6_tables not found in directory /lib/modules/6.8.0-47-generic
ip6tables-restore v1.8.10 (legacy): ip6tables-restore: unable to initialize table 'raw'

Error occurred at line: 1
Try `ip6tables-restore -h' or 'ip6tables-restore --help' for more information.
[#] resolvconf -d peer1 -f
s6-rc: fatal: unable to take locks: Resource busy
[#] ip -6 rule delete table 51820
[#] ip -6 rule delete table main suppress_prefixlength 0
[#] ip link delete dev peer1
**** Tunnel /config/wg_confs/peer1.conf failed, will stop all others! ****
**** All tunnels are now down. Please fix the tunnel config /config/wg_confs/peer1.conf and restart the container ****
[ls.io-init] done.
```

Thanks a lot. I appreciate every input!

Any recommendation for a cheap, small #firewall for my #homelab ? I realized I can’t control easily what goes out of my network only via DNS block lists

Tasks.md 2.5.3 released

Hey guys, version 2.5.3 of Tasks.md just got released!

This release is actually pretty small, as I focused a lot on resolving technical debt, fixing visual inconsistencies and improving "under the hood" stuff. Which I will continue to do a little bit more before the next release.

For those who don't know, Tasks.md is a self-hosted, Markdown file based task management board. It's like a kanban board that uses your filesystem as a database, so you can manipulate all cards within the app or change them directly through a text editor, changing them in one place will reflect on the other one.

The latest release includes the following:

- Feature: Generate an initial color for new tags based on their names

- Feature: Add new tag name input validation

- Fix: Use environment variables in Dockerfile ENTRYPOINT

- Fix: Allow dragging cards when sort is applied

- Fix: Fix many visual issues

Edit: Updated with the correct link, sorry for the confusion! The fact that someone created another application with the same name I used for the one I made is really annoying

I host tt-rss in docker and use Tiny Tiny RSS in GrapheneOS.

Docker's documentation for supported backing filesystems for container filesystems.

In general, you should be considering your container root filesystems as completely ephemeral. But, you will generally want low latency and local. If you move most of your data to NFS, you can hopefully just keep a minimal local disk for images/containers.

As for your data volumes, it's likely going to be very application specific. I've got Postgres databases running off remote NFS that are totally happy. I don't fully understand why Plex struggles to run its Database/Config dir from NFS. Disappointingly, I generally have to host it on a filesystem and disk local to my Docker host.
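One way to keep application data on NFS while images and container layers stay on the small local disk is an NFS-backed named volume. The following is a minimal sketch, not a tested setup for this thread: the server address `192.168.1.10`, the export path `/export/appdata` and the volume name `appdata` are all placeholders, and the `DOCKER` variable exists only so the command can be dry-run with `echo`.

```shell
#!/bin/sh
# Sketch only: server address, export path and volume name are placeholders.
DOCKER="${DOCKER:-docker}"   # set DOCKER=echo to print the command instead of running it

# Create a named volume backed by an NFS export, using Docker's built-in "local" driver.
# Arguments: VOLUME_NAME  NFS_SERVER  EXPORT_PATH
create_nfs_volume() {
  name="$1"; server="$2"; export_path="$3"
  "$DOCKER" volume create \
    --driver local \
    --opt type=nfs \
    --opt "o=addr=${server},rw" \
    --opt "device=:${export_path}" \
    "$name"
}

# Usage (hypothetical values):
#   create_nfs_volume appdata 192.168.1.10 /export/appdata
#   docker run -d -v appdata:/data some/image
```

Whether a given application is happy on such a volume still has to be tested case by case, as the Plex example above suggests.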

@NuXCOM_90Percent thats strange. i've been on alpha for a while and it is working and improving with every release.

@NuXCOM_90Percent I'm using tt-rss ( https://tt-rss.org ) and it works very well for me. It's very easy to deploy with docker, you can apply filters and organize your RSS into categories.

In my android phone I use feeder

I'm still early enough in that if something's wrong or not ideal about the config, I can go scorched earth and have the whole thing back up and running in an hour or two.

Is there a better filesystem that I could share out for this kind of thing? My RAID Array is run through OpenMediaVault if that helps.

I run Mistral-Nemo (12B) and Mistral-Small (22B) on my GPU and they are pretty good. As others have said, GPU memory is one of the most limiting factors. 8B models are decent, 15-25B models are good and 70B+ models are excellent (solely based on my own experience). Go for q4_K models, as they will run many times faster than higher-precision quantizations with little quality degradation. They typically come in S (Small), M (Medium) and L (Large) variants; take the largest that fits in your GPU memory. If you go below q4, you may see more severe and noticeable quality degradation.
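To get a feel for what "fits in your GPU memory" means, a rough rule of thumb (an approximation, not a figure from any model card) is that the weights take about parameters × bits per weight / 8 bytes, with some extra memory needed for context. A small sketch of that arithmetic follows; the bits-per-weight value you pass in and the default 1.5 GB headroom are assumptions, and real q4_K files use somewhat more than 4 bits per weight.

```shell
#!/bin/sh
# Rough weights-only size: billions of parameters * bits per weight / 8 = GB.
# Arguments: PARAMS_IN_BILLIONS  BITS_PER_WEIGHT
estimate_model_gb() {
  awk -v p="$1" -v b="$2" 'BEGIN { printf "%.1f\n", p * b / 8 }'
}

# Succeeds (exit status 0) if the estimated weights plus headroom fit in VRAM.
# Arguments: PARAMS_IN_BILLIONS  BITS_PER_WEIGHT  VRAM_GB  [HEADROOM_GB, assumed 1.5]
fits_in_vram() {
  awk -v p="$1" -v b="$2" -v v="$3" -v h="${4:-1.5}" \
    'BEGIN { exit !(((p * b / 8) + h) <= v) }'
}

# Usage:
#   estimate_model_gb 8 4                  # prints 4.0
#   fits_in_vram 12 4 12 && echo "12B at 4 bits should fit in 12 GB"
```

Treat the result as a first filter only; actual usage depends on context length and the runtime.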

If you need to serve only one user at the time, ollama +Webui works great. If you need multiple users at the same time, check out vLLM.

Edit: I'm simplifying it very much, but hopefully it is simple and actionable as a starting point. I've also seen great stuff from Gemma2-27B

Edit2: added links

Edit3: a decent GPU regarding bang for buck IMO is the RTX 3060 with 12GB. It may be available on the used market for a decent price and offers a good amount of VRAM and GPU performance for the cost. I would like to propose AMD GPUs, as they offer much more GPU memory for their price, but not all of them are equally well supported by ROCm and I'm not sure about compatibility with these tools, so perhaps others can chime in.

Edit4: you can also use openwebui with vscode with the continue.dev extension such that you can have a copilot type LLM in your editor.

LLMs use a ton of VRAM, the more VRAM you have the better.

If you just need an API, then TabbyAPI is pretty great.

If you need a full UI, then Oobabooga's Text Generation WebUI is a good place to start

TinyLLM on a separate computer with 64GB RAM and a 12-core AMD Ryzen 5 5500GT, using the rocket-3b.Q5_K_M.gguf model, runs very quickly. Most of the RAM is used up by other programs I run on it; the LLM doesn't take the lion's share. I used to self-host on just my laptop (5+ year old Thinkpad with upgraded RAM) and it ran OK with a few models, but after a few months I saved up to build a rig just for that kind of stuff to improve performance. It is all CPU, no GPU, even though a GPU would be faster, since I was curious whether CPU-only would be usable, which it is. I also use the LLama-2 7b model or the 13b version; the 7b model ran slowly on my laptop but runs at a decent speed on a larger rig. The fewer billions of parameters, the goofier they get. Rocket-3b is great for quickly getting an idea of things, not great for copy-pasters. LLama 7b or 13b is a little better for handing you almost-exactly-correct answers for things. I think those models are meant for programming, but sometimes I ask them general life questions or vent to them and they receive it well and offer OK advice. I hope this info is helpful :)

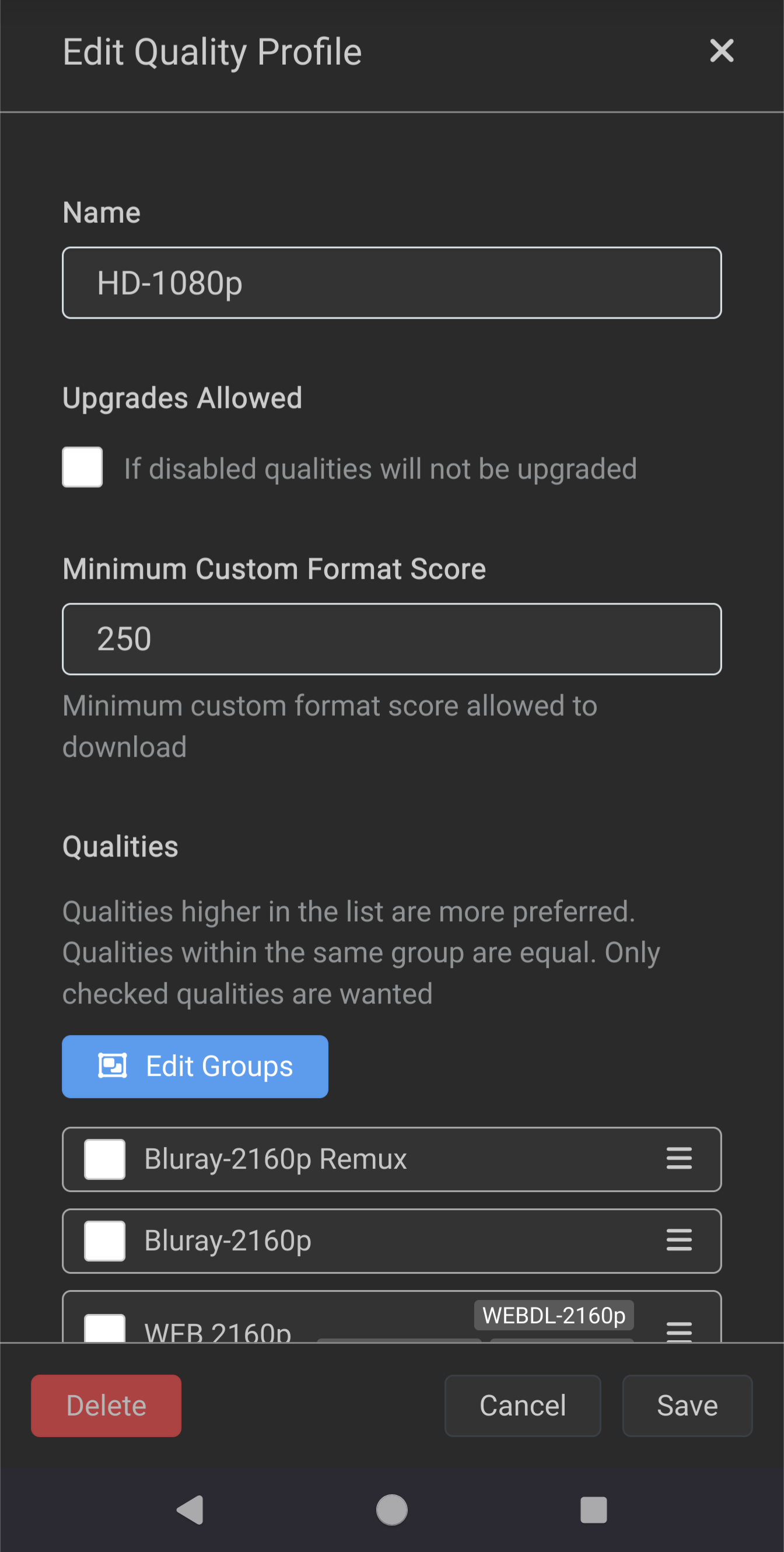

I can actually help ya out with this. Not sure why Sonarr no longer lets you select the language profile (it used to).

To add an extra requirement (e.g. language, group, etc.) you need to set up a custom format, give it a score/weight in your profile(s) and then set the minimum score required to get you what you want.

Custom format

Add a language format

Then add the language condition and pick the language you want. You can add multiple conditions

Then go to your profiles and add weighting and requirement to each profile

I cannot for the life of me figure out the formatting. I'm so sorry lol

Something you might want to look into is using mTLS, or client certificate authentication, on any external facing services that aren't intended for anybody but yourself or close friends/family. Basically, it means nobody can even connect to your server without having a certificate that was pre-generated by you. On the server end, you just create the certificate, and on the client end, you install it to the device and select it when asked.

The viability of this depends on what applications you use, as support for it must be implemented by its developers. For anything only accessed via web browser, it's perfect. All web browsers (except Firefox on mobile...) can handle mTLS certs. Lots of Android apps also support it. I use it for Nextcloud on Android (so Files, Tasks, Notes, Photos, RSS, and DAVx5 apps all work) and support works across the board there. It also works for Home Assistant and Gotify apps. It looks like Immich does indeed support it too. In my configuration, I only require it on external connections by having 443 on the router be forwarded to 444 on the server, so I can apply different settings easily without having to do any filtering.

As far as security and privacy goes, mTLS is virtually impenetrable so long as you protect the certificate and configure the proxy correctly, and similar in concept to using Wireguard. Nearly everything I publicly expose is protected via mTLS, with very rare exceptions like Navidrome due to lack of support in subsonic clients, and a couple other things that I actually want to be universally reachable.
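The "create the certificate on the server, install it on the client" step can be sketched with `openssl`. This is an illustrative sketch only, not the setup described above: the CA name, the client name `phone`, the key size and the 365-day validity are all placeholder choices.

```shell
#!/bin/sh
# Sketch: create a private CA and one client certificate signed by it.
# All names (CN values, file names, validity period) are placeholders.

# Arguments: OUTPUT_DIR
make_ca() {
  dir="$1"
  openssl req -x509 -newkey rsa:2048 -nodes -days 365 \
    -subj "/CN=Example Home CA" \
    -keyout "$dir/ca.key" -out "$dir/ca.crt"
}

# Arguments: OUTPUT_DIR  CLIENT_NAME
make_client_cert() {
  dir="$1"; name="$2"
  # Generate the client key and a signing request, then sign it with the CA.
  openssl req -newkey rsa:2048 -nodes \
    -subj "/CN=$name" \
    -keyout "$dir/$name.key" -out "$dir/$name.csr" &&
  openssl x509 -req -in "$dir/$name.csr" \
    -CA "$dir/ca.crt" -CAkey "$dir/ca.key" -CAcreateserial \
    -days 365 -out "$dir/$name.crt"
}

# Usage:
#   mkdir -p ./certs && make_ca ./certs && make_client_cert ./certs phone
#   openssl verify -CAfile ./certs/ca.crt ./certs/phone.crt
```

For installation on a phone or browser, the client key and certificate are usually bundled into a PKCS#12 file with `openssl pkcs12 -export`. On the server side, the reverse proxy is then told to trust `ca.crt` and to require a client certificate; with nginx, for instance, that is done with the `ssl_client_certificate` and `ssl_verify_client` directives.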

In my experience, using spinning disks, the performance is very poor, and times for scrub and resilver are very long. For example, in a raidz1 with 4x8TB, scrubbing takes 2-3 weeks and resilvering takes almost 2 months. I must also add very poor performance in degraded state. This is a very old post, but things are still the same.

I'll look around, I haven't done any work with the AP in years though.

Nope, no access. I'm not sure of their process, but I found this.

API Keys

An API key is the access key required for making API calls. If you have not received your API key, please contact AP Customer Support.

This is actually very easy. You can copy the files from the container, even while it's not running, onto your host system to edit there, and then copy them back afterwards.

See the top answer on https://stackoverflow.com/questions/22907231/how-to-copy-files-from-host-to-docker-container for step by step instructions on how to do this.
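The copy-out, edit, copy-back cycle can be sketched with `docker cp`. The container name `app` and the path `/config/app.conf` in the usage lines are placeholders, and the `DOCKER` variable is only there so the commands can be printed with `echo` instead of executed.

```shell
#!/bin/sh
DOCKER="${DOCKER:-docker}"   # set DOCKER=echo for a dry run

# Copy a file out of a container to the host (docker cp also works on stopped containers).
# Arguments: CONTAINER  PATH_IN_CONTAINER  HOST_PATH
pull_from_container() {
  "$DOCKER" cp "$1:$2" "$3"
}

# Copy the edited file from the host back into the container.
# Arguments: CONTAINER  HOST_PATH  PATH_IN_CONTAINER
push_to_container() {
  "$DOCKER" cp "$2" "$1:$3"
}

# Usage (hypothetical container and path):
#   pull_from_container app /config/app.conf ./app.conf
#   "${EDITOR:-vi}" ./app.conf
#   push_to_container app ./app.conf /config/app.conf
```

If the application rewrites its config on shutdown, stopping the container before editing and starting it again afterwards avoids having the change overwritten.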

I recommend following self-hosting communities like this and /r/selfhosted. There are some resources I found helpful for learning relevant concepts & tools. Ultimately, the most useful guide is the cycle of self-hosting, failing, and learning.

Some relevant resources to start with (can't vouch for them, but they are worth checking out)

- UNIX and Linux System Administration Handbook: Nemeth, Evi, Snyder, Garth, Hein, Trent, Whaley, Ben, Mackin, Dan: 9780134277554: Amazon.com: Books

- Steadfast Self-Hosting - 📖 Home

- about_this_site [Self Hosting Manual]

- mikeroyal/Self-Hosting-Guide: Self-Hosting Guide. Learn all about locally hosting (on premises & private web servers) and managing software applications by yourself or your organization. Including Cloud, LLMs, WireGuard, Automation, Home Assistant, and Networking.

- r/SelfHosted Wiki

- Server Setup Basics for Self Hosting | Hacker News

Security is top priority, so guides about that:

- [Guide] Securing A Linux Server : selfhosted

- justSem/r-selfhosted-security: Started from the beginners security guide on r/selfhosted - this repo aims to be a collection of guides

You can skim through various resources, check what you believe is important to know (security, backup, network, etc.), learn those, be confident, setup a basic functional server and go from there. The most important advice: read with the intention of learning, if you read a term that you think is very important but you didn't know what it is, go and search what it means; this will save you so much headache later on.

Based on your output, it looks like it’s listening on 2284 on IPv4 and only on 2283 on IPv6

I'm sure I've seen paid software that will detect and read data from several popular hardware controllers. Maybe there's something free that can do the same.

For the future, I'd say that with modern copy on write filesystems, so long as you don't mind the long rebuild on power failures, software raid is fine for most people.

I found this, which seems to be someone trying to do something similar with a drive array built with an Intel raid controller

Note, they are using drive images, you should be too.

Thank you! It was a cool design project, but I ended up not publishing the design as it is quite difficult to assemble and work with. Here are a couple more photos:

I have used Duplicati and Kopia. Duplicati works kinda well; the problem is the installation is such a pain in the ass, and it never actually worked as intended. That's why I switched to Kopia: the installation is a lot easier, it has a GUI and a system tray icon, and it has better documentation. Google Drive backups with the GUI require a little rclone configuration, nothing crazy

For those on the fence about Borg Backup because it's a command line app, FYI there's a great frontend GUI for it called Vorta (yeah, in line with the Trek theme lol) that works really well. I don't see it mentioned often, thought I'd pass that along. Might want to avoid the Flatpak version if you need to back up stuff outside your /home dir.