G. F. Händel, Alcina, HWV 34, Act 2, Scene 12 – Recitativo accompagnato: Ah! Ruggiero crudel & Aria: Ombre pallide (Alcina) – Konzerthaus Wien, 26 May 2021

15 tracks from Cyberpunk 2077: Original Score arranged for piano

Direct link to PDF: https://cdn-s-cyberpunk.cdprojektred.com/cp2077-songbook-digital.pdf

ESM3, an AI biology model, is available in three sizes. The smallest one is open, while the medium and large versions are available for commercial use via API.

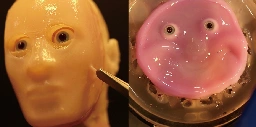

A technique for attaching a skin made from living human cells to a robotic framework could give robots the ability to emote and communicate better

This smiling robot face made of living skin is absolute nightmare fuel

Giving robots a human-like exterior has been the standard for years — centuries even. But giving them actual, living skin that can be manipulated into

Original publication: https://www.cell.com/cell-reports-physical-science/fulltext/S2666-3864(24)00335-7

Stability AI stabilized by investment from Silicon Valley royalty, new executive team

Text-to-image AI outfit can now buy time to build products and profit

The YouTuber shared his 'side of the story' on inappropriate messages with a minor

Nvidia loses a cool $500B as market questions AI boom

Cisco was briefly the world's most valuable company too, you know, just before the dot com bust

Many of the large language models that power chatbots claim to be open, but restrict access to code and training data.

Without paywall: https://archive.ph/4Du7B Original conference paper: https://dl.acm.org/doi/10.1145/3630106.3659005

In this week's DF Direct, I spend a bit of time looking into the Starfield modding scene - more specifically checking o…

The first paid DLC for Bethesda's big space RPG is overpriced, paltry, and emphasizes the game's lifelessness

Valve's 64GB and 512GB Steam Deck LCD models are both less than $390 until next month

Days after allegations that he was banned from Twitch for inappropriately texting a minor, Dr Disrespect says he's taking a 'vacation' from streaming.

"What leadership do you have?"

Three side remarks about China, which can be a peculiar point of comparison for Russia, or perhaps for any other country:

- They actually banned consoles for a quite significant 15 years (2000–2015), which strongly tilted their market towards PC.

- Their companies actively make PC-type gaming handhelds, and many of them were well established in the business ahead of the current “Steam Deck” wave/bandwagon: GPD (once called GamePad Digital, first release in 2016), OneXPlayer (2020), Ayaneo (2021).

- Chinese gaming companies are very much at the whim of censorship and of occasional out-of-the-blue “crackdowns,” and many have therefore reoriented themselves toward an international audience to de-risk their business.

Just for reference, a few years back, (ex-Microsoft) David Plummer had this historical dive into the (MIPS) origin of the blue color, and how Windows is not blue anymore: https://youtu.be/KgqJJECQQH0?t=780

The rumor is 1.76 trillion, or 8x220B (mixture of experts) to be specific: https://wandb.ai/byyoung3/ml-news/reports/AI-Expert-Speculates-on-GPT-4-Architecture---Vmlldzo0NzA0Nzg4

Likely due to being a prototype. Production laptops from Tuxedo tend to have the “TUX” penguin-in-a-circle logo on the Super key by default. They have also been offering custom-engraved keyboards (even with the entire keyboard engraved from scratch to the customer’s specifications) as an added service, so they probably have a supplier or production facility that can change the Super key.

By the way, there was one YouTube channel that ended up ordering a laptop with Wingdings engraving from them: https://youtu.be/nidnvlt6lzw?t=186

If you want RTX though (does it work properly on Linux?)

Yes it does. For example, Hans-Kristian Arntzen declared the DirectX Raytracing (DXR) implementation in VKD3D-proton as feature complete in February 2023 (https://github.com/HansKristian-Work/vkd3d-proton/issues/154#issuecomment-1434761594). And since November 2023/release 2.11, VKD3D-proton in fact runs with DXR enabled by default (https://github.com/HansKristian-Work/vkd3d-proton/releases/tag/v2.11).

How does this analogy work at all? LoRA is chosen by the modifier to be low-rank to accommodate some desktop/workstation memory constraint, not because the other weights are “very hard” to modify if you happen to have the necessary compute and I/O. The development of LoRA is also largely directed by storage reduction (hence not too many layers modified) and preservation of generalizability (since training generalizable models is hard). The Kronecker product versions, in particular, were first developed in the context of federated learning, and not for desktop/workstation fine-tuning (also, LoRA is fully capable of modifying all weights; it is rather a technique to do so in a correlated fashion to reduce the size of the gradient update). And much of the development of LoRA happened in the context of otherwise fully open datasets (e.g. LAION) that are just not manageable in desktop/workstation settings.
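To make the “modifies all weights, but in a correlated fashion” point concrete, here is a minimal pure-Python sketch of a low-rank update. The toy sizes, the helper names (`matmul`, `lora_update`, `trainable_params`) and the `scale` parameter are made up for illustration and are not taken from any particular LoRA library; the only thing assumed is the usual formulation W' = W + B·A with B of shape d_out × r and A of shape r × d_in.

```python
import random

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def lora_update(w, b, a, scale=1.0):
    """Return W + scale * (B @ A), i.e. W with a low-rank correction added."""
    delta = matmul(b, a)
    return [[w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
            for w_row, d_row in zip(w, delta)]

def trainable_params(d_out, d_in, rank):
    """Number of entries in the factors B (d_out x rank) and A (rank x d_in)."""
    return rank * (d_out + d_in)

random.seed(0)
d_out, d_in, rank = 6, 5, 2  # toy sizes, chosen only for illustration
w = [[random.gauss(0, 1) for _ in range(d_in)] for _ in range(d_out)]
b = [[random.gauss(0, 1) for _ in range(rank)] for _ in range(d_out)]
a = [[random.gauss(0, 1) for _ in range(d_in)] for _ in range(rank)]

w_new = lora_update(w, b, a)

# Count how many entries of W actually moved under the rank-2 update.
changed = sum(abs(x - y) > 1e-12
              for old_row, new_row in zip(w, w_new)
              for x, y in zip(old_row, new_row))
print(f"entries changed: {changed} of {d_out * d_in}")
print(f"trainable: {trainable_params(d_out, d_in, rank)} vs full: {d_out * d_in}")
```

With generic (random) factors the update touches every entry of W, even though only r·(d_out + d_in) numbers are trained; the entries simply cannot move independently of one another. For a hypothetical 4096 × 4096 layer at rank 8, that would be 65,536 trained values instead of roughly 16.8 million.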

This narrow perspective of “source” is taking away the actual usefulness of compute/training here. Datasets from e.g. LAION to Common Crawl have been available for some time, along with training code (sometimes independently reproduced) for the Imagen diffusion model or GPT. It was only when e.g. GPT-J came along, and somebody invested in the compute (including how to scale it to their specific cluster), that the result became useful.

This is a very shallow analogy. Fine-tuning is rather the standard technical approach to reduce compute, even if you have access to the code and all training data. Hence there has always been a rich and established ecosystem for fine-tuning, regardless of “source.” Patching closed-source binaries is not the standard approach, since compilation is far less computationally intensive than today’s large-scale training.

Java bytecode is a far-fetched example. The JVM does assume a specific architecture that is particular to the CPU-dominant world in which it was developed, and Java bytecode cannot be trivially executed (efficiently) on a GPU or FPGA, for instance.

And by the way, the issue of weight portability is far more relevant than the forced comparison to (simple) code can capture. Today’s large-scale training code is usually highly specific to a particular cluster (or TPU, WSE), as opposed to the resulting weights. Even if you got hold of somebody’s training code, you often have to reinvent the wheel to scale it to your own particular compute hardware, interconnect, I/O pipeline, etc. This is not commodity open source on your home PC or workstation.

The situation is somewhat different and nuanced. With weights there are tools for fine-tuning, LoRA/LoHa, PEFT, etc., which makes the situation different from that of program binaries. You can see that despite e.g. LLaMA being “compiled”, others can make significant use of it to build models that surpass the previous iteration (see e.g. recently WizardLM 2 in relation to LLaMA 2). Weights are also architecturally independent to a much larger degree than binaries (you can usually cross-train/run inference on GPU, Google TPU, Cerebras WSE, etc. with the same weights).

There is even a sentence in README.md that makes it explicit:

The source files in this repo are for historical reference and will be kept static, so please don’t send Pull Requests suggesting any modifications to the source files […]

Probably from the FAQ pane on the Kickstarter page:

What about Steamdeck support?

Will be 100% supported

Last updated: Tue, April 23 2024 10:55 AM PDT

There has been:

The plot twist is, however, that the delinquent (ex-) PI in question, Jeffry Isaacson, is a retired professor since 2022. So they cannot even fire him (or tenure-revoke, hypothetically speaking).

If you have to ask the price… ;-) The Nvidia B100 is known to cost between $30k and $40k. https://www.cnbc.com/2024/03/19/nvidias-blackwell-ai-chip-will-cost-more-than-30000-ceo-says.html

He was criticized also because the girls were not in danger of becoming infected. See e.g. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC6724388/ :

The Chinese episode has also generated other issues. Several notes demonstrate that this was an experiment and not a therapeutic intervention (even He Jiankui called it a 'clinical trial'). The babies were not at risk of being born with HIV, given that sperm washing had been used so that only non-infected genetic material was used. Further, even though one of the parents (or both) was infected, it did not mean the children were more prone to becoming infected. The risk of becoming infected by the parents' virus was very low (Cowgill et al., 2008). In sum, there was no curative purpose, nor even the intention to prevent a pressing risk. Finally, the interventions were different for each twin. In one case, the two copies of CCR5 were modified, whereas in the other only one copy was modified. This meant that one twin could still become infected, although the evolution of the disease would probably be slower. The purpose of the scientific team was apparently to monitor the evolution of both babies and the differences in how they reacted to their different genetic modifications. This note also raised the issue of parents' informed consent regarding human experimentation, which follows a much stricter regimen than consent for therapeutic procedures.

Other critical articles (e.g. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC8524470/) have also cited in particular https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4779710/, which states in the result section:

No HIV transmission occurred in 11,585 cycles of assisted reproduction using washed semen among 3,994 women (95% confidence interval [CI] = 0–0.0001). Among the subset of HIV-infected men without plasma viral suppression at the time of semen washing, no HIV seroconversions occurred among 1,023 women following 2,863 cycles of assisted reproduction using washed semen (95%CI= 0–0.0006). Studies that measured HIV transmission to infants reported no cases of vertical transmission (0/1,026, 95% CI= 0–0.0029). Overall, 56.3% (2,357/4,184, 95%CI=54.8%–57.8%) of couples achieved a clinical pregnancy using washed semen.
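As a quick sanity check on the last figure in that quote, the pregnancy-rate interval can be reproduced with a plain normal-approximation (Wald) interval. This is only a sketch: the quoted passage does not say which method the paper used, so the Wald formula and the helper name `wald_interval` are assumptions here.

```python
import math

def wald_interval(successes, trials, z=1.96):
    """Normal-approximation (Wald) confidence interval for a proportion.

    z=1.96 corresponds to a two-sided 95% interval.
    """
    p = successes / trials
    half_width = z * math.sqrt(p * (1 - p) / trials)
    return p, p - half_width, p + half_width

# Counts taken from the quoted result: 2,357 clinical pregnancies among 4,184 couples.
p, lo, hi = wald_interval(2357, 4184)
print(f"{p:.1%} ({lo:.1%} to {hi:.1%})")  # prints "56.3% (54.8% to 57.8%)"
```

This formula is not suitable for the zero-event rows (e.g. 0/11,585), where it collapses to a zero-width interval; those upper bounds must come from a different method, which is not reconstructed here.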

GIMP is a special case. GIMP is getting outdeveloped by Krita these days. E.g.:

https://gitlab.gnome.org/GNOME/gimp/-/issues/9284

Or compare with:

https://www.phoronix.com/news/Krita-2024-GPUs-AI

GIMP had its share of self-inflicted wounds, starting with a toxic mailing list that drove away people from professional VFX and the surrounding FilmGimp/CinePaint community. When the GIMP people subsequently took over GEGL development from Rhythm & Hues, it took literally 15 years until it barely worked.

Now we are past the era of simple GPU processing into diffusion models/“generative AI” and GIMP is barely keeping up with simple GPU processing (like resizing, see above).

Have people actually checked the versions there before making the suggestion?

F-Droid: Version 3.5.4 (13050408) suggested Added on Feb 23, 2023

Google Play: Updated on Aug 27, 2023

https://f-droid.org/en/packages/org.videolan.vlc/

https://play.google.com/store/apps/details?id=org.videolan.vlc

The problem seems to be squarely with VLC themselves.

I can’t see this “news” reported anywhere else

Clearly not trying very hard:

https://www.bbc.com/news/live/world-68642036 (8:40, “Russian TV airs fake video blaming Ukraine for Moscow attack”)

https://www.motherjones.com/politics/2024/03/deepfake-video-terror-moscow-putin-ukraine-claims/

and The Sun is notorious for making stuff up

And this article cites — with direct link — a BBC reporter’s X post. Well, who is actually “making stuff up” here…

Yet, BBC Verify journalist Shayan Sardarizadeh stated that an AI-generated audio was dubbed over a mismatch of two recent Ukrainian TV interviews.

Exposing the sham, he wrote on X: "The deepfake video has in fact been created as a composite of two recent interviews published in the last few days with Danilov and Ukraine's military intelligence chief Kyrylo Budanov.

Going by my own statistics of how many articles I feel are worth posting/linking on Lemmy, the most direct alternative to Kotaku is Eurogamer. PCGamer, PCGamesN and Rock Paper Shotgun are occasionally OK, but you have to cut through a lot of spam and clickbait (i.e. exactly this “50 guides per week” type of corporate guidance). Not sure if this is also the state that Kotaku will end up in. The Verge sometimes also has good articles, but the flood of gadget consumerism articles there is obnoxious.

In other words, there may be downsides just to placing CS within an engineering school, let alone making it an independent college. Left entirely to themselves, computer scientists can forget that computers are supposed to be tools that help people. Georgia Tech’s College of Computing worked “because the culture was always outward-looking. We sought to use computing to solve others’ problems,” Guzdial said. But that may have been a momentary success. Now, at Michigan, he is trying to rebuild computing education from scratch, for students in fields such as French and sociology. He wants them to understand it as a means of self-expression or achieving justice—and not just a way of making software, or money.

—

Early in my undergraduate career, I decided to abandon CS as a major. Even as an undergraduate, I already had a side job in what would become the internet industry, and computer science, as an academic field, felt theoretical and unnecessary. Reasoning that I could easily get a job as a computer professional no matter what it said on my degree, I decided to study other things while I had the chance.

I have a strong memory of processing the paperwork to drop my computer-science major in college, in favor of philosophy. I walked down a quiet, blue-tiled hallway of the engineering building. All the faculty doors were closed, although the click-click of mechanical keyboards could be heard behind many of them. I knocked on my adviser’s door; she opened it, silently signed my paperwork without inviting me in, and closed the door again. The keyboard tapping resumed. The whole experience was a product of its time, when computer science was a field composed of oddball characters, working by themselves, and largely disconnected from what was happening in the world at large. Almost 30 years later, their projects have turned into the infrastructure of our daily lives. Want to find a job? That’s LinkedIn. Keep in touch? Gmail, or Instagram. Get news? A website like this one, we hope, but perhaps TikTok. My university uses a software service sold by a tech company to run its courses. Some things have been made easier with computing. Others have been changed to serve another end, like scaling up an online business.

The struggle to figure out the best organizational structure for computing education is, in a way, a microcosm of the struggle under way in the computing sector at large. For decades, computers were tools used to accomplish tasks better and more efficiently. Then computing became the way we work and live. It became our culture, and we began doing what computers made possible, rather than using computers to solve problems defined outside their purview. Tech moguls became famous, wealthy, and powerful. So did CS academics (relatively speaking). The success of the latter—in terms of rising student enrollments, research output, and fundraising dollars—both sustains and justifies their growing influence on campus.

If computing colleges have erred, it may be in failing to exert their power with even greater zeal. For all their talk of growth and expansion within academia, the computing deans’ ambitions seem remarkably modest. Martial Hebert, the dean of Carnegie Mellon’s computing school, almost sounded like he was talking about the liberal arts when he told me that CS is “a rich tapestry of disciplines” that “goes far beyond computers and coding.” But the seven departments in his school correspond to the traditional, core aspects of computing plus computational biology. They do not include history, for example, or finance. Bala and Isbell talked about incorporating law, policy, and psychology into their programs of study, but only in the form of hiring individual professors into more traditional CS divisions. None of the deans I spoke with aspires to launch, say, a department of art within their college of computing, or one of politics, sociology, or film. Their vision does not reflect the idea that computing can or should be a superordinate realm of scholarship, on the order of the arts or engineering. Rather, they are proceeding as though it were a technical school for producing a certain variety of very well-paid professionals. A computing college deserving of the name wouldn’t just provide deeper coursework in CS and its closely adjacent fields; it would expand and reinvent other, seemingly remote disciplines for the age of computation.

Near the end of our conversation, Isbell mentioned the engineer’s fallacy, which he summarized like this: Someone asks you to solve a problem, and you solve it without asking if it’s a problem worth solving. I used to think computing education might be stuck in a nesting-doll version of the engineer’s fallacy, in which CS departments have been asked to train more software engineers without considering whether more software engineers are really what the world needs. Now I worry that they have a bigger problem to address: how to make computer people care about everything else as much as they care about computers.

Ian Bogost is a contributing writer at The Atlantic.

Technology

Universities Have a Computer-Science Problem

The case for teaching coders to speak French

By Ian Bogost

[Photo of college students working at their computers as part of a hackathon at Berkeley in 2018] Max Whittaker / The New York Times / Redux

March 19, 2024

Last year, 18 percent of Stanford University seniors graduated with a degree in computer science, more than double the proportion of just a decade earlier. Over the same period at MIT, that rate went up from 23 percent to 42 percent. These increases are common everywhere: The average number of undergraduate CS majors at universities in the U.S. and Canada tripled in the decade after 2005, and it keeps growing. Students’ interest in CS is intellectual—culture moves through computation these days—but it is also professional. Young people hope to access the wealth, power, and influence of the technology sector.

That ambition has created both enormous administrative strain and a competition for prestige. At Washington University in St. Louis, where I serve on the faculty of the Computer Science & Engineering department, each semester brings another set of waitlists for enrollment in CS classes. On many campuses, students may choose to study computer science at any of several different academic outposts, strewn throughout various departments. At MIT, for example, they might get a degree in “Urban Studies and Planning With Computer Science” from the School of Architecture, or one in “Mathematics With Computer Science” from the School of Science, or they might choose from among four CS-related fields within the School of Engineering. This seepage of computing throughout the university has helped address students’ booming interest, but it also serves to bolster their demand.

Another approach has gained in popularity. Universities are consolidating the formal study of CS into a new administrative structure: the college of computing. MIT opened one in 2019. Cornell set one up in 2020. And just last year, UC Berkeley announced that its own would be that university’s first new college in more than half a century. The importance of this trend—its significance for the practice of education, and also of technology—must not be overlooked. Universities are conservative institutions, steeped in tradition. When they elevate computing to the status of a college, with departments and a budget, they are declaring it a higher-order domain of knowledge and practice, akin to law or engineering. That decision will inform a fundamental question: whether computing ought to be seen as a superfield that lords over all others, or just a servant of other domains, subordinated to their interests and control. This is, by no happenstance, also the basic question about computing in our society writ large.

—

When I was an undergraduate at the University of Southern California in the 1990s, students interested in computer science could choose between two different majors: one offered by the College of Letters, Arts and Sciences, and one from the School of Engineering. The two degrees were similar, but many students picked the latter because it didn’t require three semesters’ worth of study of a (human) language, such as French. I chose the former, because I like French.

An American university is organized like this, into divisions that are sometimes called colleges, and sometimes schools. These typically enjoy a good deal of independence to define their courses of study and requirements as well as research practices for their constituent disciplines. Included in this purview: whether a CS student really needs to learn French.

The positioning of computer science at USC was not uncommon at the time. The first academic departments of CS had arisen in the early 1960s, and they typically evolved in one of two ways: as an offshoot of electrical engineering (where transistors got their start), housed in a college of engineering; or as an offshoot of mathematics (where formal logic lived), housed in a college of the arts and sciences. At some universities, including USC, CS found its way into both places at once. The contexts in which CS matured had an impact on its nature, values, and aspirations. Engineering schools are traditionally the venue for a family of professional disciplines, regulated with licensure requirements for practice. Civil engineers, mechanical engineers, nuclear engineers, and others are tasked to build infrastructure that humankind relies on, and they are expected to solve problems. The liberal-arts field of mathematics, by contrast, is concerned with theory and abstraction. The relationship between the theoretical computer scientists in mathematics and the applied ones in engineering is a little like the relationship between biologists and doctors, or physicists and bridge builders. Keeping applied and pure versions of a discipline separate allows each to focus on its expertise, but limits the degree to which one can learn from the other.

By the time I arrived at USC, some universities had already started down a different path. In 1988, Carnegie Mellon University created what it says was one of the first dedicated schools of computer science. Georgia Institute of Technology followed two years later. “Computing was going to be a big deal,” says Charles Isbell, a former dean of Georgia Tech’s college of computing and now the provost at the University of Wisconsin-Madison. Emancipating the field from its prior home within the college of engineering gave it room to grow, he told me. Within a decade, Georgia Tech had used this structure to establish new research and teaching efforts in computer graphics, human-computer interaction, and robotics. (I spent 17 years on the faculty there, working for Isbell and his predecessors, and teaching computational media.)

Kavita Bala, Cornell University’s dean of computing, told me that the autonomy and scale of a college allows her to avoid jockeying for influence and resources. MIT’s computing dean, Daniel Huttenlocher, says that computing’s breakneck pace of innovation makes independence necessary. It would be held back in an arts-and-sciences context, he told me, or even an engineering one. But the computing industry isn’t just fast-moving. It’s also reckless. Technology tycoons say they need space for growth, and warn that too much oversight will stifle innovation. Yet we might all be better off, in certain ways, if their ambitions were held back even just a little. Instead of operating with a deep understanding or respect for law, policy, justice, health, or cohesion, tech firms tend to do whatever they want. Facebook sought growth at all costs, even if its take on connecting people tore society apart. If colleges of computing serve to isolate young, future tech professionals from any classrooms where they might imbibe another school’s culture and values—engineering’s studied prudence, for example, or the humanities’ focus on deliberation—this tendency might only worsen.

When I raised this concern with Isbell, he said that the same reasoning could apply to any influential discipline, including medicine and business. He’s probably right, but that’s cold comfort. The mere fact that universities allow some other powerful fiefdoms to exist doesn’t make computing’s centralization less concerning. Isbell admitted that setting up colleges of computing “absolutely runs the risk” of empowering a generation of professionals who may already be disengaged from consequences to train the next one in their image. Inside a computing college, there may be fewer critics around who can slow down bad ideas. Disengagement might redouble. But he said that dedicated colleges could also have the opposite effect. A traditional CS department in a school of engineering would be populated entirely by computer scientists, while the faculty for a college of computing like the one he led at Georgia Tech might also house lawyers, ethnographers, psychologists, and even philosophers like me. Bala told me that her college was established not to teach CS on its own but to incorporate policy, law, sociology, and other fields into its practice. “I think there are no downsides,” she said.

Mark Guzdial is a former faculty member in Georgia Tech’s computing college, and he now teaches computer science in the University of Michigan’s College of Engineering. At Michigan, CS wasn’t always housed in engineering—Guzdial says it started out inside the philosophy department, as part of the College of Literature, Science and the Arts. Now that college “wants it back,” as one administrator told Guzdial. Having been asked to start a program that teaches computing to liberal-arts students, Guzdial has a new perspective on these administrative structures. He learned that Michigan’s Computer Science and Engineering program and its faculty are “despised” by their counterparts in the humanities and social sciences. “They’re seen as arrogant, narrowly focused on machines rather than people, and unwilling to meet other programs’ needs,” he told me. “I had faculty refuse to talk to me because I was from CSE.”