Smorty [she/her] @lemmy.blahaj.zone

I'm a person who tends to program stuff in Godot and also likes to look at clouds. Sometimes they look really spicy!

awww thanksies ~

im so vrri thankful that peeps are oki with others not being oki with adult stuffs.. at least some are <3

onli de left one matters <3

cn do right one when time is right, but left one is like - the one u wanna have more of i feel

none of em..

jus get it done with, jus do the thing so u don hav evil feels for hopefully long...

*looks left*

*looks right*

hi...

so like... how do u do? ~

i luv the cold! i cn wear all the fluff comf clothing and look at the bluish global lighting and yellowish sun doin fancy reflections.

i luv the feels of bein cold outside cuz im not actually col, im jus col in face ~

also snow i supr nice and it sounds nice and it makes everything sound all like it's dampened and like - it looks so nice ~ ~ ~ <3

alsoalso snow is like - glistening like lil sparkles an an and it s so magical feelin and also peeps looks more comfy in snow then when sweating evily and sadly in hot climate ;( they look so uncomfy in hot climate where uncomfy sweaty ewewewew not nice at all. but snow + sunny + comf clothes!!!! that's it, it's amazing.

i wish i were not tho ;(

dun wanna mek peeps uncomf..

idc for games, if it can play terraria and let me develop vr games and has open source drivers, im happi

could u maybe... put nsfw on dis... i would like to not have seen this.

yes, I can completely see that.

it switch pixel sampling method midway through? arm smooth and face pixy

hmmmmmmm that is... tomorrow....

i undrstand, but i personally have diffrnt onion on subject. i dun wan adult things in my life..

Imma be honest... all the options are kinda shid. makes the other person feel terrible. i get it, calling peeps egg is bad. i have made personal experience with that. but responding like that just seems uncomfy and toxic to me.

hmmmm jerboa doesn't do anything when i shake..

why no fluttershy in image?...

i - i wan her on the image >~<

oh yeah! That's right, i used that like two times! i do enjoy how that duck ai stuff doesn't get in the way of using the search engine. also - woah, the MoE model is SO fast, im very surprised. i guess that's the advantage of MoE...

eh - LLMs are pretty coop i feel... but this is not a community about LLMs, this is about funis!!!! so i shall remain silent and keep my ai shidposts to other communities

don't like em rule

i don like them links. like - tell me what’s in the link, don’t just “rule” and put a link.

i believe that this is not a very lemmy thing to do. especially on mobile, where it leaves the app and opens some shiddy proprietary other social media platform in your browser.

EDIT: completely forgot to add picture. now it's here!

Can we overload functions in GDScript? Like - at all?

How cool would that be!? Like having multiple constructors for a class or having a move method with the parameters (x:float, y:float) OR (relative:Vector2) ! That'd be super cool and useful I thinks <3

this is not ragebait rule

image description (contains clarifications on background elements)

Lots of different seemingly random images in the background, including some fries, Mr. Krabs, a girl in overalls hugging a stuffed tiger, a Mark Zuckerberg "big brother is watching" poster, two images of Fluttershy (a pony from My Little Pony), one of them reading "u only kno my swag, not my lore", a picture of Parkzer from the streamer "DougDoug" and a slider gameplay element from the rhythm game "osu!". The background is made light so that the text can be easily read. The text reads:

```
i wanna know if we are on the same page about ai. if u disagree with any of this or want to add something, please leave a comment!

smol info:
- LM = Language Model (ChatGPT, Llama, Gemini, Mistral, ...)
- VLM = Vision Language Model (Qwen VL, GPT4o mini, Claude 3.5, ...)
- larger model = more expensive to train and run
smol info end

- training processes on current AI systems is often clearly unethical and very bad for the environment :(
- companies are really bad at selling AI to us and giving them a good purpose for average-joe-usage
- medical ai (e.g. protein folding) is almost only positive
- ai for disabled people is also almost only positive
- the idea of some AI machine taking our jobs is scary
- "AI agents" are scary. large companies are training them specifically to replace human workers
- LMs > image generation and music generation
- using small LMs for repetitive, boring tasks like classification feels okay
- using the largest, most environmentally taxing models for everything is bad. Using a mixture of smaller models can often be enough
- people with bad intentions using AI systems results in bad outcome
- ai companies train their models however they see fit. if an LM "disagrees" with you, that's the training's fault
- running LMs locally feels more okay, since they need less energy and you can control their behaviour

I personally think more positively about LMs, but almost only negatively about image and audio models. Are we on the same page? Or am I an evil AI tech sis?
```

IMAGE DESCRIPTION END

***

i hope this doesn't cause too much hate. i just wanna know what u people and creatures think <3

They are literally ruling

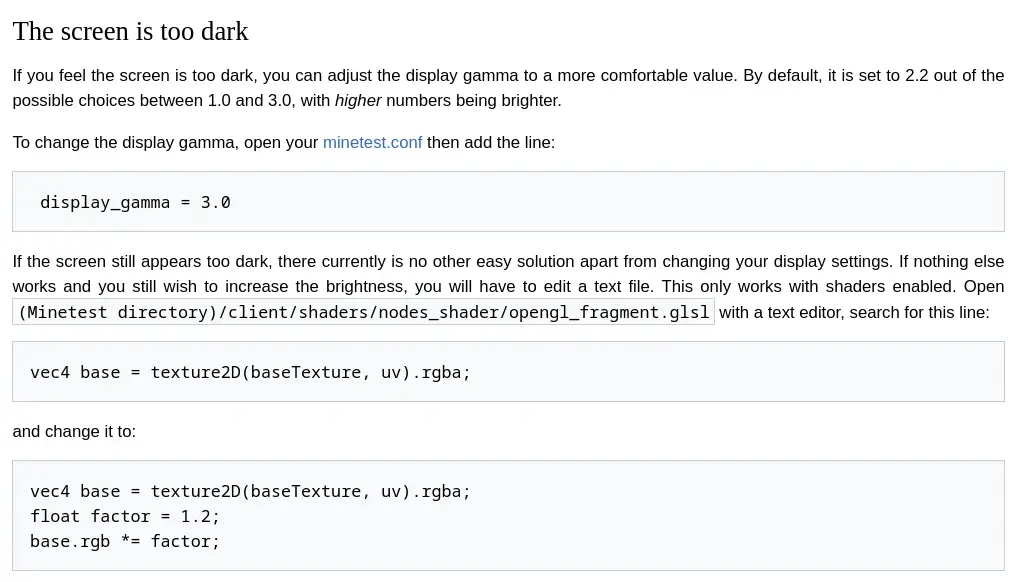

Comes from the Luanti (Minetest) troubleshooting page

im jus tryin to ply gem...

This MUST be a joke. Like - "Hmm increase the gamma. If that doesn't help, change your monitor. If you STILL WANT better brightness, maybe like - uhmmmm modify the game's glsl fragment shader to render the image to the screen?... yeah, do that."

There is no way this is meant seriously... but if it is, i do enjoy that..

For tool-result data: how do u let the llm know?

When an LLM calls a tool it usually returns some sort of value, usually a string containing some info like ["Tell the user that you generated an image", "Search query results: [...]"].

How do you tell the LLM the output of the tool call?

I know that some models like llama3.1 have a built-in tool "role", which lets u feed the model with the result, but not all models have that. Especially non-tool-tuned models don't have that. So let's find a different approach!

Approaches

Appending the result to the LLMs message and letting it continue generate

Let's say for example, a non-tool-tuned model decides to use web_search tool. Now some code runs it and returns an array with info. How do I inform the model? do I just put the info after the user prompt? This is how I do it right now:

- System: you have access to tools [...] Use this format [...]

- User: look up todays weather in new york

- LLM: Okay, let me run a search query `<tool>{"name":"web_search", "args":{"query":"weather in newyork today"} }</tool>` `<result>Search results: ["The temperature is 19° Celsius"]</result>` Today's temperature in New York is 19° Celsius.

Here, everything in the `<result>` tags is added programmatically, and the message after the closing `</result>` tag is generated by the model again, continuing from there. Everything within tags is hidden from the user, but the rest is shown. I like this way of doing it, but it does feel weird to insert stuff into the LLM's generation like that.
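The insert-into-assistant loop could look roughly like this. It's a minimal Python sketch, not the actual code behind this post: `generate`, `TOOLS` and the stub model are hypothetical stand-ins, and it assumes the backend stops right after `</tool>` and keeps that stop string in its output (many backends drop the stop string, in which case you'd append it back yourself).

```python
import json
import re
from typing import Callable, Dict

# Hypothetical tool registry: tool name -> Python function taking the JSON "args".
TOOLS: Dict[str, Callable[..., str]] = {
    "web_search": lambda query: f'Search results: ["(fake result for {query})"]',
}

TOOL_RE = re.compile(r"<tool>(.*?)</tool>", re.DOTALL)
HIDDEN_RE = re.compile(r"<(tool|result)>.*?</\1>", re.DOTALL)


def run_turn(generate: Callable[[str], str], prompt: str, max_steps: int = 4) -> str:
    """Let the model write; when it emits a <tool> call, run it, splice in a
    <result> block and let the model continue from there.

    `generate(text)` is a hypothetical completion function: it continues `text`
    and is assumed to stop right after emitting "</tool>" (stop string kept).
    """
    text = prompt
    for _ in range(max_steps):
        chunk = generate(text)
        text += chunk
        match = TOOL_RE.search(chunk)
        if match is None:
            break  # no (further) tool call: the answer is complete
        call = json.loads(match.group(1))
        result = TOOLS[call["name"]](**call["args"])
        text += f"<result>{result}</result>"  # added by code, not by the model
    return text[len(prompt):]


def visible_text(reply: str) -> str:
    """What the user sees: everything outside <tool>/<result> tags."""
    return HIDDEN_RE.sub("", reply)


# Usage with a stub instead of a real model:
def fake_generate(text: str) -> str:
    if "<result>" not in text:
        return ('Okay, let me run a search query <tool>{"name":"web_search", '
                '"args":{"query":"weather in new york today"}}</tool>')
    return " Today's temperature in New York is 19 degrees Celsius."


reply = run_turn(fake_generate, "User: look up todays weather in new york\nLLM: ")
print(visible_text(reply))
```

The `max_steps` cap is there so a model that keeps calling tools can't loop forever; it also leaves room for several tool calls in a row.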

Here's the system prompt I use

```plaintext
You have access to these tools
{
  "web_search":{
    "description":"Performs a web search and returns the results",
    "args":[{"name":"query", "type":"str", "description":"the query to search for online"}]
  },
  "run_code":{
    "description":"Executes the provided python code and returns the results",
    "args":[{"name":"code", "type":"str", "description":"The code to be executed"}],
    "triggers":["run some code which...", "calculate using python"]
  }
}
ONLY use tools when user specifically requests it.
Tools work with <tool> tag.
Write an example output of what the result of tool call looks like in <result> tags
Use tools like this:

User: Hey can you calculate the square root of 9?
You: I will run python code to calculate the root!\n<tool>{"name":"run_code", "args":{"code":"from math import sqrt; print(sqrt(9.0))"}}</tool><result>3.0</result>\nThe square root of 9 is 3.

User can't read result, you must tell her what the result is after <result> tags closed
```

Appending tool result to user message

Sometimes I opt for an option where the LLM has a multi-step decision process about the tool calling, then optionally actually calls a tool, and then the result is appended to the original user message, without a trace of the actual tool call:

```plaintext
What is the weather like in new york?

<tool_call_info>
You automatically ran a search query, these are the results [some results here]
Answer the message using these results as the source.
</tool_call_info>
```

This works, but it feels like a hacky way to a solution which should be obvious.
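Building that augmented user message is just string assembly. A small Python sketch, assuming the common role/content chat message format; the system prompt and the `augment_user_message` helper are made up for illustration:

```python
from typing import Dict, List


def augment_user_message(user_message: str, results: List[str]) -> str:
    """Append tool results to the user's message; the tool call itself leaves no trace."""
    listed = "\n".join(f"- {r}" for r in results)
    return (
        f"{user_message}\n\n"
        "<tool_call_info>\n"
        "You automatically ran a search query, these are the results:\n"
        f"{listed}\n"
        "Answer the message using these results as the source.\n"
        "</tool_call_info>"
    )


# Hypothetical chat in the common role/content message format.
messages: List[Dict[str, str]] = [
    {"role": "system", "content": "You are a helpful assistant."},
    {
        "role": "user",
        "content": augment_user_message(
            "What is the weather like in new york?",
            ["The temperature is 19° Celsius"],
        ),
    },
]
print(messages[1]["content"])
```

Since the model only ever sees a normal user turn, this works with any chat template, which is probably why it's the easiest one to get running.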

The lazy option: Custom Chat format

Orrrr u just use a custom chat format. ditch <|endoftext|> as your stop keyword and embrace your new best friend: "\nUser: "!

So, the chat template goes something like this

```yaml
User: blablabla hey can u help me with this
Assistant Thought: Hmm maybe I should call a tool? Hmm let me think step by step. Hmm i think the user wants me to do a thing. Hmm so i should call a tool. Hmm
Tool: {"name":"some_tool_name", "args":[u get the idea]}
Result: {some results here}
Assistant: blablabla here is what i found
User: blablabla wow u are so great thanks ai
Assistant Thought: Hmm the user talks to me. Hmm I should probably reply. Hmm yes I will just reply. No tool needed
Assistant: yesyes of course, i am super smart and will delete humanity some day, yesyes
[...]
```

Again, this works, but it generally results in worse performance, since current instruction-tuned LLMs are, well, tuned on a specific chat template, and a made-up template moves the model away from what it was trained on. It also requires multi-shot prompting so the model gets how this new template works, and it may still generate some unwanted roles, like `Assistant Action: Walks out of compute center and enjoys life`, which can be funi, but is unwanted.
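Rendering that custom format and reading it back could be sketched like this in Python. It's an illustration under assumptions: the role names come from the template above, `"\nUser: "` is the stop string, and the model output is invented. Unknown roles like `Assistant Action` simply get dropped.

```python
from typing import List, Tuple

# The new stop string: generation ends when the model starts a "User" turn.
STOP = "\nUser: "
# Roles the program accepts; anything else the model invents is dropped.
ALLOWED_ROLES = {"Assistant Thought", "Tool", "Result", "Assistant"}


def render(turns: List[Tuple[str, str]]) -> str:
    """Render (role, text) pairs as 'Role: text' lines, ending on the
    assistant's thought prefix so the model continues from there."""
    body = "\n".join(f"{role}: {text}" for role, text in turns)
    return body + "\nAssistant Thought:"


def cut_at_stop(generated: str) -> str:
    """Emulate the stop string for backends that don't support one."""
    return generated.split(STOP, 1)[0]


def parse_turns(generated: str) -> List[Tuple[str, str]]:
    """Split output back into (role, text); lines without a known role are ignored.

    The prompt ends with 'Assistant Thought:', so that prefix is re-attached
    to the first output line before parsing.
    """
    turns = []
    for line in ("Assistant Thought:" + generated).splitlines():
        role, sep, text = line.partition(": ")
        if sep and role in ALLOWED_ROLES:
            turns.append((role, text))
    return turns


# Usage with made-up model output:
prompt = render([("User", "look up todays weather in new york")])
raw = (
    " Hmm the user wants the weather. Hmm so i should call a tool.\n"
    'Tool: {"name":"web_search", "args":{"query":"weather new york"}}\n'
    "Assistant Action: Walks out of compute center and enjoys life\n"
    "Assistant: Let me check!\n"
    "User: wow thanks ai"
)
turns = parse_turns(cut_at_stop(raw))
print([role for role, _ in turns])
```

In a real loop you'd also stop at `Tool:` lines, run the tool and append a `Result:` line yourself, same as with the `<result>` tags.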

Conclusion

Eh, I just append the result to the user message with some tags and am done with it. It's super easy to implement, but I also really like the insert-into-assistant approach, since it then naturally uses tools in an in-chat way, maybe being able to call multiple tools in succession, in an almost agent-like way.

But YOU! Tell me how you approach this problem! Maybe you have come up with a better approach, maybe even while reading this post here.

Please share your thoughts, so we can all have a good CoT about it.

me whenwen wen u are thereand i notice and

Very much a reaction post to this very nice post, but this time without the spicy but instead with the comfy ~

When nVidia says "5070 is as strong as 4090", they also said "possible with the power of AI". Meaning: it's complete BS

many people seem to be excited about nVidia's new line of GPUs, which is reasonable, since at CES they really made it seem like these new bois are insane for their price.

Jensen (the CEO guy) said that with the power of AI, the 5070, priced under $600, is in the same class as the 4090, which sits above the $1500 price point.

Here's my idea: They talk a lot about upscaling, generating frames and pixels and so on. I think what they mean by both having similar performance is that the 4090 without AI upscaling and such achieves similar performance to the 5070 with DLSS and whatever else.

So yes, for pure "gaming" performance, with games that support it, the GPU will have the same performance. But there will be artifacts.

For ANYTHING besides these "gaming" usecases, it will probably be closer to the 4080 or whatever (idk GPU naming..).

So if you care about inference, blender or literally anything not-gaming: you probably shouldn't care about this.

i'm totally up for counter arguments. maybe i'm missing something here, maybe i'm being a dumdum <3

imma wait for amd to announce their stuffs and just get the top one, for the open drivers. not an nvidia person myself, but their research seems spicy. currently still slobbing along with a 1060 6GB

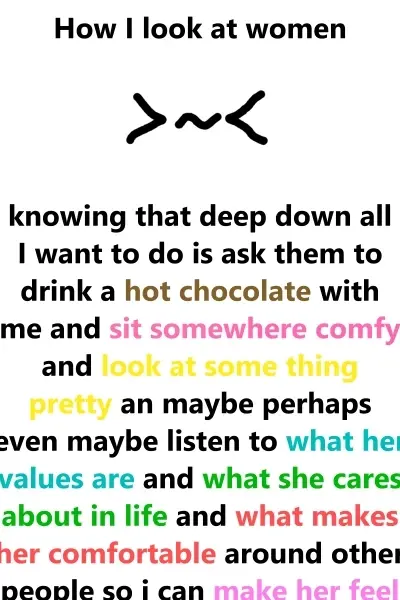

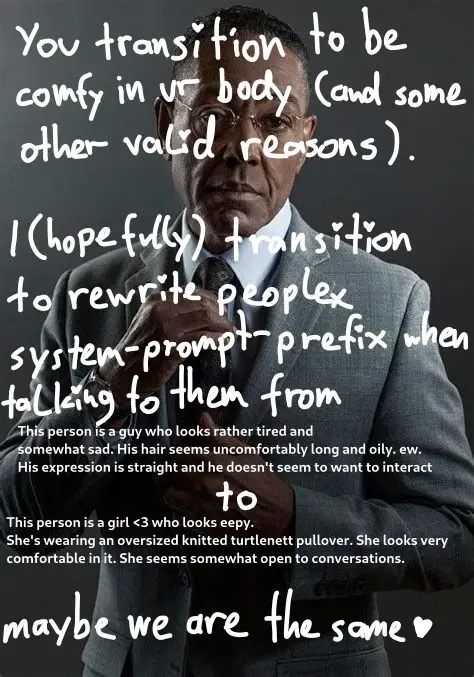

maybe we are the same <3

i am very open to conversations ~

EDIT: it seems many people don't know what a "system prompt" is. that's understandable and totally normal <3

here's a short explanation (CW: ai shid, but written by me)

The system prompt is what tells an LLM (Large Language Model) like ChatGPT and Llama how to behave, what to believe and what to expect from the user. So "rewriting peoples system prompts" means: overriding peoples views of me.
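In code, the system prompt is usually just the first message of the chat. A tiny Python sketch, assuming the role/content message format many chat APIs use; the prompts and the `with_system_prompt` helper are made up:

```python
from typing import Dict, List

Message = Dict[str, str]


def with_system_prompt(messages: List[Message], new_prompt: str) -> List[Message]:
    """Return a copy of the chat with the system prompt replaced (or added)."""
    rest = [m for m in messages if m["role"] != "system"]
    return [{"role": "system", "content": new_prompt}] + rest


# Hypothetical chat in the role/content format used by many chat APIs.
chat: List[Message] = [
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "Hi! Who are you?"},
]
# Same conversation, but the model now starts from a different "worldview".
updated = with_system_prompt(chat, "You are a helpful assistant. Always answer in German.")
print(updated[0]["content"])
```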

with this context, the funi pic should be more understandable, where the two text boxes represent peoples "system prompts" before and after my potential transition.

feel free to ask stuff in the comments or message me. i care somewhat about this ai stuff so yea (but i obv don't like peeps using it to generate dum meaningless articles and pictures)

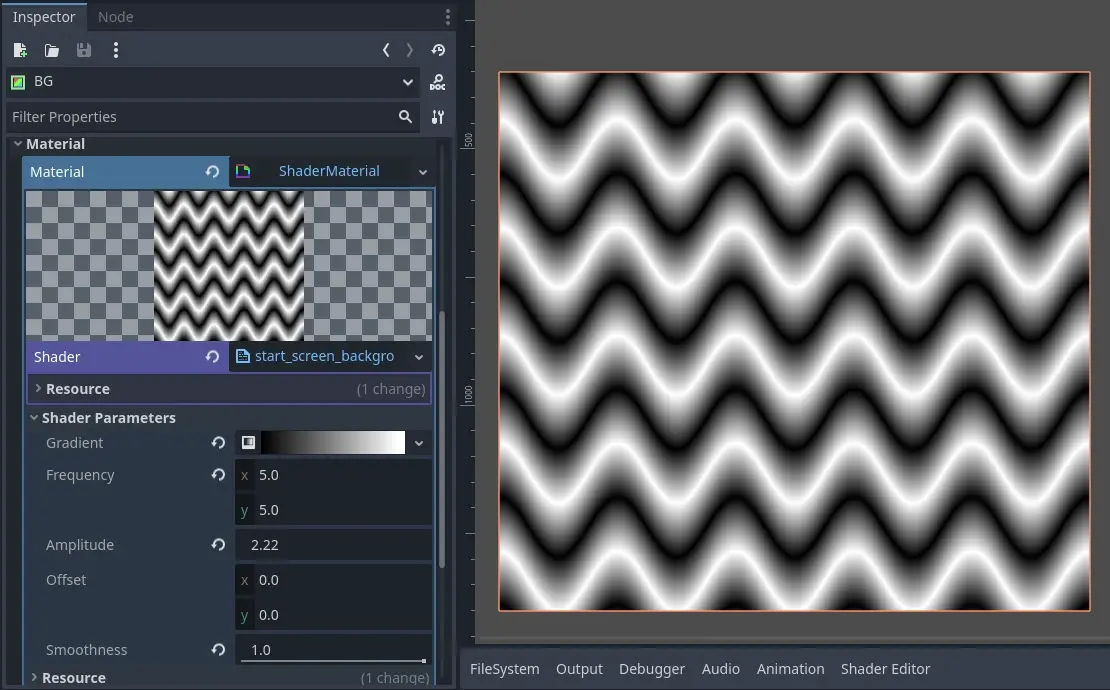

A cool zigzag-to-sin-wave line shader I just wrote

i wanted to add a cool background to some game i'm making for a friend, so i had a lil fun with glsl and made this.

i uploaded a short screen recording showing all the parameters, but apparently catbox doesn't work well with that anymore. So I replaced it with this image.

here is the code, use it however u want <3

```glsl
shader_type canvas_item;

uniform sampler2D gradient : source_color;
uniform vec2 frequency = vec2(10.0, 10.0);
uniform float amplitude = 1.0;
uniform vec2 offset;
// 0.0 = zigzag; 1.0 = sin wave
uniform float smoothness : hint_range(0.0, 1.0) = 0.0;

// Triangle wave with period 2: rises 0 -> 1 on [0, 1], falls 1 -> 0 on [1, 2]
float zig_zag(float value){
	float is_even = step(0.5, fract(value * 0.5));
	float rising = fract(value);
	float falling = (rising - 1.0) * -1.0;

	float result = mix(rising, falling, is_even);
	return result;
}

// Blends the zigzag with a cosine wave that has the same peaks and valleys
float smoothzag(float value, float _smoothness){
	float z = zig_zag(value);
	float s = (cos((value + 1.0) * PI)) * 0.5 + 0.5;
	return mix(z, s, _smoothness);
}

void fragment() {
	// UV * 2.0 - 1.0 remaps UV from [0, 1] to [-1, 1]
	float sinus = zig_zag(((UV.y * 2.0 - 1.0) + smoothzag((UV.x * 2.0 - 1.0) * frequency.x - offset.x, smoothness) * amplitude * 0.1) * frequency.y - offset.y);
	COLOR = texture(gradient, vec2(sinus, 0.0));
}
```

if u have any questions, ask right away!
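To poke at what `zig_zag` and `smoothzag` do without opening Godot, here's a rough Python port of the two helper functions. `fract`, `step` and `mix` are re-implemented to match the GLSL built-ins of the same name; this is only for looking at the numbers, Godot never runs it.

```python
import math


def fract(x: float) -> float:
    """GLSL fract(): x - floor(x)."""
    return x - math.floor(x)


def step(edge: float, x: float) -> float:
    """GLSL step(): 0.0 if x < edge, else 1.0."""
    return 0.0 if x < edge else 1.0


def mix(a: float, b: float, t: float) -> float:
    """GLSL mix(): linear interpolation a * (1 - t) + b * t."""
    return a * (1.0 - t) + b * t


def zig_zag(value: float) -> float:
    is_even = step(0.5, fract(value * 0.5))  # 1.0 on the falling half of each period
    rising = fract(value)
    falling = (rising - 1.0) * -1.0
    return mix(rising, falling, is_even)


def smoothzag(value: float, smoothness: float) -> float:
    z = zig_zag(value)
    s = math.cos((value + 1.0) * math.pi) * 0.5 + 0.5
    return mix(z, s, smoothness)


print([zig_zag(x / 4) for x in range(9)])
print([round(smoothzag(x / 4, 1.0), 3) for x in range(9)])
```

Both curves hit the same peaks (1.0 at odd inputs) and valleys (0.0 at even inputs), which is why blending them with `smoothness` morphs the zigzag into a smooth wave without shifting it sideways.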

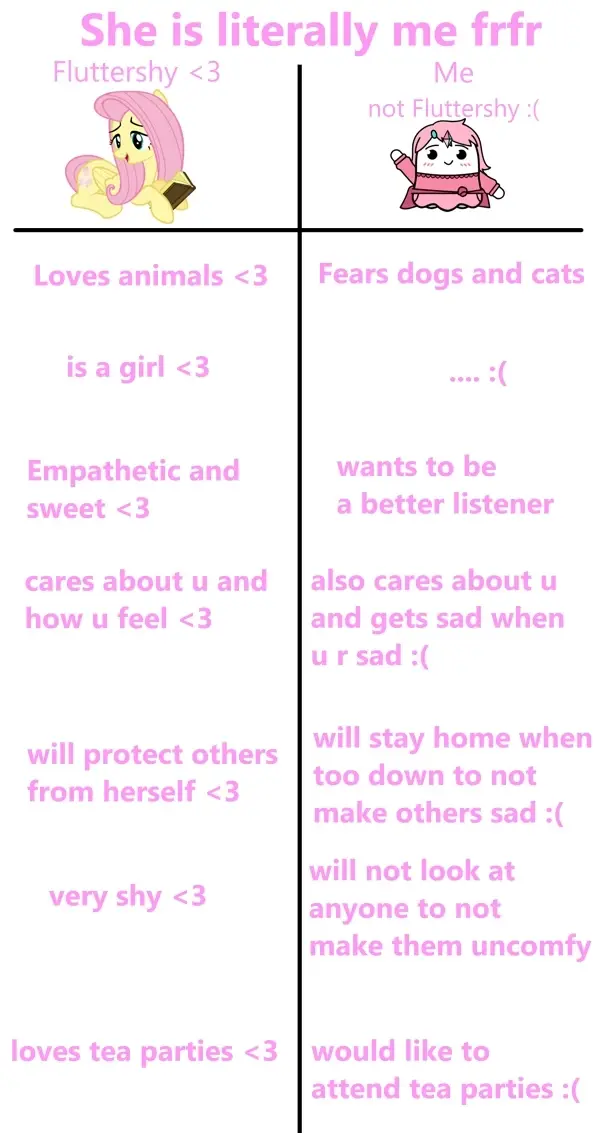

just like her ~<3

omygosh fluttershy's so sweet!!!! <3 <3 <3 <3 <3

EDIT: Edited me to her

ANOTHER EDIT: hmm i think i was being too hyperaggressive with this post, i really didn't wanna make anyone pity me, i'm so sorry. i will not delete this post, however i will try and refrain from creating posts similar to this one in the future. i was not trying to be pitied. i was trying to post a thing where people say

> woah, so relatable!! no way, you got it right on! nice shot!

but it ended up pulling peeps who are super nice, which is gud, but also made me unreasonably comfy. if this post were made by someone else, i would totally join y'all and i'd comment

> you are literally trolling, u seem super comfycozy an i really hope u find a someone peep who can have the sit-and-drink-tea with u <3 <3 <3

but i cannot with this one! cuz this is my own post! aaaaaaa

Before you buy any courses: Read this free prompting guide! (no login required)

A Comprehensive Overview of Prompt Engineering

I see ads for paid prompting courses a bunch. I recommend having a look at this guide page first. It also has some other info about LLMs.

Would u like to be my fren?

Here my GitLab (Ignore the name, I misspelled it)

My itch page with my two previous game jams

If possible, contact me on Matrix @smorty:catgirl.cloud

If you don't have that, direct messages are also fine <3

In recent months I have found out that I cannot handle "simple" Matrix chats, and now I am hoping that chatting + playing might be better!

I'm really not much of a gamer, so I don't know my way around club penguin and Veloren that much yet. But I know that Veloren is good (which, btw, is not Valorant or whatever that shooty game is called). This is me trying to be hip and cool by playing video games

I accidentally posted this on the wrong community first, sorrryy!!

I wanna watch MLP - does it matter where I start?

Nothing to add really. I enjoy the visuals and the fluffy vibes the show gives off ~ Have never really watched it though...

Is there a good place to start, or does it not matter?

GDShader: What's worse? if statement or texture sample?

I have heard many times that if statements in shaders slow down the gpu massively. But I also heard that texture samples are very expensive.

Which one is more endurable? Which one is less impactful?

I am asking because I need to decide whether I should multiply a value by 0 or use an if statement.
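Written out, the two options look like this. It's a Python sketch that copies GLSL's `step()` so it runs anywhere; it only shows that both forms give the same value (the GLSL version of the branchless one would be `value * step(edge, x)`). It says nothing about which one is faster on a GPU, since that depends on the hardware and the shader compiler, so profiling both in the actual shader is the safer answer. The function names are made up.

```python
def step(edge: float, x: float) -> float:
    """Same rule as GLSL step(): 0.0 if x < edge, else 1.0."""
    return 0.0 if x < edge else 1.0


def masked_with_if(value: float, x: float, edge: float) -> float:
    # Option 1: an explicit branch.
    if x >= edge:
        return value
    return 0.0


def masked_with_multiply(value: float, x: float, edge: float) -> float:
    # Option 2: branchless, multiply by the 0.0 or 1.0 that step() produces.
    return value * step(edge, x)


for x in (0.0, 0.25, 0.5, 0.75):
    print(x, masked_with_if(2.0, x, 0.5), masked_with_multiply(2.0, x, 0.5))
```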

Does Blahaj Lemmy support ActivityPub? I'm trying to log in to PeerTube

Hii <3 ~

I have not worked with the whole ActivityPub thing yet, so I'd really like to know how the whole thing kinda works.

As far as i can tell, it allows you to use different parts of the fediverse by using just one account.

Does it also allow to create posts on other platforms, or just comments?

I have tried to log in with my lemmy.blahaj.zone account, and it redirected me correctly to the blahaj lemmy site.

Then I entered my lemmy credentials and logged in. I got logged in to my lemmy correctly, but I also got an error just saying "Could not be accessed" in German.

And now I am still not logged in in PeerTube.

Sooo does blahaj zone support ActivityPub, or is this incorrectly "kinda working" but not really?

Is Lemmy your "main social media app"? If not, which one is it?

I have fully transitioned to using Lemmy and Mastodon right when third party apps weren't allowed on Spez's place anymore, so I don't know how it is over there anymore.

What do you use? Are you still switching between the two, essentially dualbooting?

What other social media do you use? How do you feel about Fediverse social media platforms in general?

(I'm sorry if I'm the 100th person to ask this on here...)

We already have Linux at home!

I have to work with Win11 for work and just noticed the lil Tux man in Microsoft's File Explorer. It's likely there to connect to WSL.

Apparently now Microsoft wants people to keep using Windows in a really interesting way: by simply integrating Linux within their own OS!

This way, people don't have to make the super hard and complicated switch to linux, but they get to be lazy, use the preinstalled container and say "See, I use Linux too!".

While this is generally a good thing for people wanting to do things with the OS, it is also a clear sign that they want to make it feel "unnecessary" to switch to Linux, because you already have it!

WSL alone was already a smart move, but this goes one step further. This is a clever push on their side, increasing the barrier to switch even more, since now there is less of a reason to. They are making it too comfortable to stay within Microsoft's walls.

On a different note: Should the general GNU/Linux community do the same? Should we integrate easier access to running Winblows apps on GNU/Linux? Currently I still find it too much of a hassle to correctly run Winblows applications, almost always relying on Lutris, Steam's Proton or Bottles to do the work for me.

I think it would be a game changer to have a double click of an EXE file result in immediate automatic wine configuration for easy and direct use of the software, even if it takes a bit to set up.

I might just be some fedora using pleb, but I think having quick and easy access to wine would make many people feel much more comfortable with the switch.

Having a similar system to how Winblows does it, with one container for all your .exe programs, would likely be a good start (instead of creating a new C drive and whatever for every program, which seems to be what Lutris and Bottles do).
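For what it's worth, plain `wine` already uses one shared prefix (`~/.wine`) for everything unless the `WINEPREFIX` environment variable points somewhere else, so a double-click launcher could lean on that. Here's a rough Python sketch of such a launcher's core; the prefix path and the `wine_command` helper are made up for illustration, and it only builds the command instead of running it.

```python
import os
from pathlib import Path
from typing import Dict, List, Tuple

# Hypothetical location of one shared prefix for all .exe programs.
SHARED_PREFIX = Path.home() / ".local" / "share" / "wine-shared"


def wine_command(exe: str, prefix: Path = SHARED_PREFIX) -> Tuple[List[str], Dict[str, str]]:
    """Build the command and environment to run `exe` in one shared Wine prefix.

    WINEPREFIX is the environment variable Wine reads to pick its prefix
    (the directory holding the fake C: drive). Reusing one value for every
    program gives the "one container for all .exe files" behaviour.
    """
    env = dict(os.environ)
    env["WINEPREFIX"] = str(prefix)
    return ["wine", exe], env


cmd, env = wine_command("setup.exe")
print(cmd, env["WINEPREFIX"])
# To actually launch it (needs Wine installed):
#   import subprocess
#   subprocess.run(cmd, env=env)
```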

EDIT: Uploaded correct image

How should one treat fill-in-the-middle code completions?

Many code models, like the recent OpenCoder, have the functionality to perform FIM (fill-in-the-middle) tasks, similar to Microsoft's GitHub Copilot.

You give the model a prefix and a suffix, and it will then try and generate what comes in between the two, hoping that what it comes up with is useful to the programmer.
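For the raw prompt, the prefix and suffix get wrapped in special tokens. Here's a Python sketch using the token names documented for Qwen2.5-Coder (`<|fim_prefix|>`, `<|fim_suffix|>`, `<|fim_middle|>`); OpenCoder and other models may use different tokens, so check the model card before copying this. The code snippet is made up.

```python
# FIM special tokens in the style documented for Qwen2.5-Coder (assumption:
# other models, e.g. OpenCoder, may name them differently).
FIM_PREFIX = "<|fim_prefix|>"
FIM_SUFFIX = "<|fim_suffix|>"
FIM_MIDDLE = "<|fim_middle|>"


def build_fim_prompt(prefix: str, suffix: str) -> str:
    """Prefix-suffix-middle order: the model is expected to write the middle next."""
    return f"{FIM_PREFIX}{prefix}{FIM_SUFFIX}{suffix}{FIM_MIDDLE}"


code_before_cursor = "def add(a, b):\n    "
code_after_cursor = "\n\nprint(add(1, 2))\n"
prompt = build_fim_prompt(code_before_cursor, code_after_cursor)
print(prompt)
```

This has to go through a raw completion endpoint; sending it through a chat template wraps it in chat roles, and the model tends to answer like a chatbot instead.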

I don't understand how we are supposed to treat these generations.

Qwen Coder (1.5B and 7B) for example likes to first generate the completion, and then it rewrites what is in the suffix. Sometimes it adds three entire new functions out of nothing, which doesn't even have anything to do with the script itself.

With both Qwen Coder and OpenCoder I have found that if you put only whitespace as the suffix (the part which comes after your cursor essentially), the model generates a normal chat response with markdown and everything.

This is some weird behaviour. I might have to put some fake code as the suffix to get some actually useful code completions.
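One way to deal with the model re-writing the suffix is to post-process: cut the completion at the point where it starts repeating code that's already after the cursor. A rough Python heuristic (an assumed rule, not something the models document) could be:

```python
def trim_fim_completion(completion: str, suffix: str) -> str:
    """Heuristic: cut the completion where it starts re-writing the suffix.

    Uses the first non-blank suffix line as an anchor and drops everything
    from the first completion line that matches it.
    """
    anchor = next((line.strip() for line in suffix.splitlines() if line.strip()), "")
    if not anchor:
        return completion  # whitespace-only suffix: nothing to compare against
    kept = []
    for line in completion.splitlines(keepends=True):
        if line.strip() == anchor:
            break
        kept.append(line)
    return "".join(kept)


completion = "return a + b\n\nprint(add(1, 2))\nprint(add(3, 4))\n"
suffix = "\n\nprint(add(1, 2))\n"
print(repr(trim_fim_completion(completion, suffix)))
```

It won't catch the "three entire new functions out of nothing" case when those come before the repeated suffix, but it removes the copied tail.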

Watching cool transfems and cis-fems online or in shows makes me sad now so I'll stop

[Requesting Engagement from transfems]

(Blahaj lemmy told me to put this up top, so I did)

I did not expect this to happen. I followed FairyPrincessLucy for a long time, cuz she's real nice and seems cool.

Time passes and I noticed how I would feel very bad when watching her do stuff. I was like

> damn, she so generally okay with her situation. Wish I was too lol

So I stopped watching her.

Just now I discovered another channel, Melody Nosurname , and I really, really like her videos! She seems very reasonable and her little character is super cute <3 But here too I noticed how watching the vids made me super uncomfortable. The representation is nice, for sure, and her videos are of very high quality, I can only recommend them (as in - the videos).

I started by noticing

> woah, her tshirt is super cute, I wanna have that too!

Then I continue with

> heyo her friend here seems also super cool. Damn wish I had cool friends

And then eventually the classic

> damn, I wish I were her

At that point, it's already over. I end up watching another video and, despite my genuine interest in the topic, I stop it in the middle, close the tab and open Lemmy (and here we are).

Finally I end up watching videos by cis men, like Scott the Woz. They are fine, and I end up not comparing myself to them (since I wouldn't necessarily want to be them). I also stopped watching feminine people in general online, as they tend to give me a very similar reaction. Just like

> yeah, that's cool that you're mostly fine with yourself, I am genuinely happy for you that you got lucky during random character creation <3

I also watched The Owl House, which is a really good show (unfortunately owned by Disney) and I stopped watching when...

Spoiler for the Owl House

it started getting gay <3 cuz I started feeling way too jealous of them just being fine with themselves and pretty and gay <3 and such

I wanted to see where the show was going, and I'm sure it's real good, but that is not worth risking my wellbeing, I thought.

So anyway...

have you had a period like that before? How did you deal with it? Do you watch transfem people? Please share your favs! <3 I also like watching SimplySnaps. Her videos are also really high quality, I just end up not being able to watch them for too long before sad hits :(

additional info about me, if anyone cares

I currently don't take HRT, but I'm on my way. I'm attending psychological therapy with a really nice therapist here in Germany. I struggle to find good words to describe how I feel but slowly I find better words for it. I'm currently 19 and present myself mostly masculine still, while trying to act very nice, generally acceptable and friendly. So kinda in a way which makes both super sweet queer people <3 <3 <3 <3 and hetero cis queerphobes accept me as just another character. (I work at a school with very mixed ideologies, so I kinda have to). But oh boi do I have social anxiety, even at home with mother...

EDIT: Changed info about SimplySnaps

EDIT2: Added The Owl House example

Want to make server less heavy, so there a rulefully small image

This one is just 37.05 kilobytes! That's a smol one!