CLI Scraper designed to build your own Alibaba Dataset

First things first: when I started building this package I misspelled <scraper> as <scrapper>. I'm not a native English speaker. I'm planning to change the name and correct it, so please don't be mad at me about it. OK, now let me introduce my first Python package.

**aba-cli-scrapper** is a CLI tool to easily build a dataset from Alibaba.

Look at the repo to learn more about this project: https://github.com/poneoneo/Alibaba-CLI-Scraper

I'm excited to share the latest release of my first Python package, aba-cli-scrapper, designed to facilitate data extraction from Alibaba. This command-line tool enables users to build a comprehensive dataset containing valuable information on products and their associated suppliers. The extracted data can be stored in either a MySQL or SQLite database, with the option to convert it into CSV files from the SQLite file.

The next feature will be an AI agent you can chat with about the saved data. I'm working on this, and it should be released very soon.

Key Features:

--Asynchronous mode for faster scraping of page results using Bright-Data API key (configuration required)

--Synchronous mode available for users without an API key (note: proxy limitations may apply)

--Supports data storage in MySQL or SQLite databases

--Text mode for those who are not comfortable with CLI apps

--Converts data to CSV files from SQLite database

Seeking Feedback and Contributions:

I'd love to hear your thoughts on this project and encourage you to test it out. Your feedback and suggestions on the package's usefulness and potential evolution are invaluable. Future plans include adding a RAG feature to enhance database interactions.

Feel free to try out aba-cli-scrapper and share your experiences! Leave a star if you liked it.

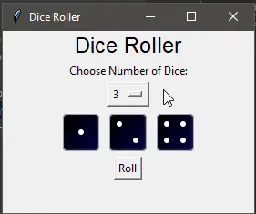

Dice Roller with Python using either tkinter or streamlit

The code on the website is JavaScript. Here it is; make sure to create dice images for it to work (e.g. dice1.png):

```
<!DOCTYPE html>
<html lang="en">
<head>
  <meta charset="UTF-8">
  <meta name="viewport" content="width=device-width, initial-scale=1.0">
  <title>Dice Roller</title>
  <style>
    body { font-family: Arial, sans-serif; }
    #result-frame { margin-top: 20px; }
  </style>
</head>
<body>
  <h2>Dice Roller</h2>
  <label for="num-dice">Choose Number of Dice:</label><br><br>
  <select id="num-dice">
    <option value="1">1</option>
    <option value="2">2</option>
    <option value="3">3</option>
    <option value="4">4</option>
    <option value="5">5</option>
    <option value="6">6</option>
    <option value="7">7</option>
  </select>
  <button onclick="rollDice()">Roll</button>
  <div id="result-frame"></div>

  <script>
    function rollDice() {
      var numDice = parseInt(document.getElementById('num-dice').value);
      var resultFrame = document.getElementById('result-frame');
      resultFrame.innerHTML = ''; // Clear previous results

      var diceImages = [];
      for (var i = 1; i <= 6; i++) {
        var img = document.createElement('img');
        // Change the path to match your uploaded images
        img.src = 'https://www.slyautomation.com/wp-content/uploads/2024/03/' + 'dice' + i + '.png';
        diceImages.push(img);
      }

      for (var j = 0; j < numDice; j++) {
        var result = Math.floor(Math.random() * 6); // Random result from 0 to 5
        var diceImage = diceImages[result].cloneNode();
        resultFrame.appendChild(diceImage);
      }
    }
  </script>
</body>
</html>
```
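Since the title mentions Python, here is a minimal sketch of the same rolling logic in Python. The names `roll_dice` and `image_names` are made up for illustration and are not taken from the linked site; a tkinter or streamlit front end would only need to display the returned image files.

```python
import random


def roll_dice(num_dice: int, sides: int = 6) -> list[int]:
    """Return one random face value (1..sides) per die."""
    if num_dice < 1:
        raise ValueError("num_dice must be at least 1")
    return [random.randint(1, sides) for _ in range(num_dice)]


def image_names(results: list[int]) -> list[str]:
    """Map face values to image file names such as dice1.png (assumed naming)."""
    return [f"dice{value}.png" for value in results]


rolls = roll_dice(3)
print(rolls, image_names(rolls))
```

Unlike the JavaScript version, `random.randint(1, sides)` is inclusive on both ends, so there is no 0-to-5 index to shift.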

FastAPI keeps generating local index.html*

I deploy a FastAPI service with docker (see my docker-compose.yml and app).

My service directory gets filled with files index.html, index.html.1, index.html.2,... that all contain

They seem to be generated any time the docker healthcheck pings the service.

How can I get rid of these?

PS: I had to put a screenshot, because Lemmy stripped my HTML in the code quote.
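One possible cause, and this is a guess because the docker-compose.yml is not visible here: if the healthcheck calls `wget` on the service URL, `wget` saves each response to the working directory as index.html, index.html.1, and so on. Below is a minimal sketch of a check that only reads the response and writes nothing to disk. The function name `is_healthy` and the URL in the example are placeholders, not taken from the post.

```python
import urllib.error
import urllib.request


def is_healthy(url: str, timeout: float = 3.0) -> bool:
    """Return True if the URL answers with a 2xx status; nothing is saved to disk."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return 200 <= response.status < 300
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, DNS failure, or an HTTP error status.
        return False


# Placeholder URL: adjust host, port and path to the real service.
print(is_healthy("http://127.0.0.1:8000/", timeout=1.0))
```

A healthcheck command could call this function and exit with a non-zero status when it returns False. If `wget` really is the cause, telling it to discard the download should also stop the files from appearing.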

Does using 'thing = list[str]()' instead of 'thing: list[str] = []' have any downsides?

I have seen some people prefer to create a list of strings by using thing = list[str]() instead of thing: list[str] = []. I think it looks kinda weird, but maybe that's just because I have never seen that syntax before. Does that have any downsides?

It is also possible to use this for dicts: thing = dict[str, SomeClass](). Looks equally weird to me. Is that widely used? Would you use it? Would you point it out in a code review?
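For reference, a small sketch comparing the two spellings at runtime (Python 3.9 or newer). Both produce a plain, empty built-in container; the subscripted form is a generic alias whose call is forwarded to the underlying class. The variable names are only for illustration.

```python
# Call form: list[str] is a generic alias, calling it builds an ordinary list.
names_call = list[str]()

# Annotation form: a literal plus a type annotation.
names_annotated: list[str] = []

# The same idea for dicts.
scores = dict[str, int]()

print(type(names_call) is list)       # True
print(names_call == names_annotated)  # True
print(type(scores) is dict)           # True
```

So the difference is mostly stylistic. The call form does a little extra work per call because it goes through the alias object, which is unlikely to matter outside hot loops.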

Datalookup: Deep nested data filtering library

Hi Python enthusiasts! I'm excited to share my latest project! The Datalookup 🔍 library makes it easier to filter and manipulate your data. The module is inspired by the Django QuerySet API and its lookups.
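To illustrate what Django-style lookups over nested data mean, here is a rough plain-Python sketch. It is not Datalookup's API: `resolve`, `filter_items`, the `OPERATORS` table and the sample data are all hypothetical and only show the idea of double-underscore paths ending in an operator.

```python
from typing import Any

# Hypothetical operator table; a real library would support many more.
OPERATORS = {
    "exact": lambda value, expected: value == expected,
    "contains": lambda value, expected: expected in value,
    "gt": lambda value, expected: value > expected,
}


def resolve(item: Any, path: list[str]) -> list[Any]:
    """Collect every value reached by following path through nested dicts and lists."""
    if isinstance(item, list):
        values = []
        for element in item:
            values.extend(resolve(element, path))
        return values
    if not path:
        return [item]
    if isinstance(item, dict) and path[0] in item:
        return resolve(item[path[0]], path[1:])
    return []


def filter_items(items: list[dict], **lookups: Any) -> list[dict]:
    """Keep items where every lookup matches at least one nested value."""
    kept = []
    for item in items:
        for lookup, expected in lookups.items():
            *path, last = lookup.split("__")
            if last in OPERATORS:
                operator = OPERATORS[last]
            else:
                # No operator suffix: treat the last part as a field name.
                path, operator = path + [last], OPERATORS["exact"]
            if not any(operator(v, expected) for v in resolve(item, path)):
                break
        else:
            kept.append(item)
    return kept


authors = [
    {"name": "Ana", "books": [{"title": "Python Basics", "year": 2019}]},
    {"name": "Ben", "books": [{"title": "Cooking", "year": 2021},
                              {"title": "Deep Python", "year": 2023}]},
]
print([a["name"] for a in filter_items(authors, books__year__gt=2020)])
```

See the repository's documentation for the library's actual classes and supported lookups.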

I'm actively seeking people to join in and help make this library even better! Whether you have ideas for new features, bug fixes, or improvements in documentation, anyone can contribute to Datalookup's development.

Github: https://github.com/pyshare/datalookup

I've just started and have some questions about running programs.

OK, so I've just started to learn Python, so very sorry for being an absolute dumbbell. I'm doing some test stuff and noticed that when I build and run my programs, they run, yes, but they don't actually do anything. I've made sure to set the right language in the Sublime editor. Perhaps it's something I've done wrong? When the console prints out the first question, I type in a number, but then nothing else happens. It seems to only print the first line and that's it. It's supposed to prompt "second:" but it does nothing. I would really appreciate your help. Thank you.

hjwp/pytest-icdiff: better error messages for assert equals in pytest

Better error messages for assert equals in pytest.

Optimizing Script to Find Fast Instances

Last month, I developed a script because lemmy.ml had become too slow. Unfortunately, I have the same problem again, but this time there are too many instances to evaluate, causing the script to take an excessively long time to complete. I'm seeking advice on how to enhance the script to simultaneously ping multiple instances. Are there any alternative scripts available that might provide a more efficient solution?

git clone https://github.com/LemmyNet/lemmy-stats-crawler && cd lemmy-stats-crawler && cargo run -- --json > stats.json

```python
#!/usr/bin/env python3
import json
import time
import requests
import requests.exceptions

from typing import List, Dict

TIME_BETWEEN_REQUESTS = 5  # 10 * 60 = 10 minutes
TIME_TOTAL = 60  # 8 * 60 * 60 = 8 hours


def get_latency(domain):
    """Return the round-trip time of one GET request, or infinity on failure."""
    try:
        start = time.time()
        if not domain.startswith(("http://", "https://")):
            domain = "https://" + domain
        requests.get(domain, timeout=3)
        end = time.time()
        return end - start
    except requests.exceptions.RequestException:
        # Covers timeouts as well as connection and DNS errors.
        return float("inf")


def measure_latencies(domains, duration):
    """Repeatedly measure all domains until the duration (seconds) has elapsed."""
    latencies = {}
    start_time = time.time()
    end_time = start_time + duration
    while time.time() < end_time:
        latencies = measure_latencies_for_domains(domains, latencies)
        time.sleep(TIME_BETWEEN_REQUESTS)
    return latencies


def measure_latencies_for_domains(domains, latencies):
    # Domains are measured one after another, which is the slow part.
    for domain in domains:
        latency = get_latency(domain)
        latencies = add_latency_to_domain(domain, latency, latencies)
    return latencies


def add_latency_to_domain(domain, latency, latencies):
    if domain not in latencies:
        latencies[domain] = []
    latencies[domain].append(latency)
    return latencies


def average_latencies(latencies):
    averages = []
    for domain, latency_list in latencies.items():
        avg_latency = sum(latency_list) / len(latency_list)
        averages.append((domain, avg_latency))
    return averages


def sort_latencies(averages):
    return sorted(averages, key=lambda x: x[1])


def get_latency_report(domains, duration):
    latencies = measure_latencies(domains, duration)
    averages = average_latencies(latencies)
    return sort_latencies(averages)


def get_instances(data: Dict) -> List[Dict]:
    instances = []
    for instance_details in data["instance_details"]:
        instances.append(instance_details)
    return instances


def get_domains(instances: List[Dict]) -> List[str]:
    return [instance["domain"] for instance in instances]


def load_json_data(filepath: str) -> Dict:
    with open(filepath) as json_data:
        return json.load(json_data)


def main():
    data = load_json_data('stats.json')
    instances = get_instances(data)
    domains = get_domains(instances)
    report = get_latency_report(domains, TIME_TOTAL)
    for domain, avg_latency in report:
        print(f"{domain}: {avg_latency:.2f} seconds")


if __name__ == "__main__":
    main()
```
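One way to ping many instances at once is a thread pool from the standard library, since the work is almost entirely waiting on the network. This is a minimal sketch rather than a drop-in patch: `measure_latencies_concurrently` is a made-up name, the latency function is passed in as a parameter, and the `fake_latency` stand-in only exists so the example runs without network access.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def measure_latencies_concurrently(domains, get_latency, max_workers=32):
    """Call get_latency(domain) for all domains in parallel threads.

    Returns a dict mapping each domain to its measured latency.
    """
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map preserves input order, so zip pairs results correctly.
        latencies = list(pool.map(get_latency, domains))
    return dict(zip(domains, latencies))


def fake_latency(domain):
    """Stand-in for a real request: sleeps briefly, returns a dummy value."""
    time.sleep(0.1)
    return float(len(domain))


domains = ["lemmy.ml", "example.social", "another.instance"]
started = time.time()
report = measure_latencies_concurrently(domains, fake_latency)
print(sorted(report.items(), key=lambda pair: pair[1]))
print(f"took {time.time() - started:.2f} seconds for {len(domains)} domains")
```

With the real `get_latency` passed in, one round over all domains should take roughly as long as the slowest request (at most the timeout) per batch of `max_workers` domains, instead of the sum of all requests. Keep in mind that many parallel requests can distort the individual latency measurements, so a moderate `max_workers` is probably safer.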

Help with spotipy

I am trying to create a playlist with Spotify and the spotipy library in Python, but I keep getting a "No token provided" error when making my API request. If I use the same token with a curl request, it works! Can someone please help? This is my code:

```
auth_manager = SpotifyOAuth(
    client_id=CLIENT,
    client_secret=SECRET,
    redirect_uri="http://example.com/",
    scope=SCOPE,
    username=spotify_display_name
)
token = auth_manager.get_access_token(
    as_dict=False,
    check_cache=True
)

sp = spotipy.Spotify(
    auth_manager=auth_manager,
    auth=token
)
user_dict = sp.current_user()
user_id = user_dict["id"]
print(f"Welcome, {user_dict['display_name']}")

# SEARCH
# QUERY FORMAT: "track: track-name year: YYYY"
spotify_search_endpoint = "https://api.spotify.com/v1/search/"
test_query = "track:Hangin'+Tough year:1989"

search_parameters = {
    "q": format_query(test_query),
    "type": "track"
}

results = sp.search(q=search_parameters["q"])
print(results)
```

output:

{'tracks': {'href': 'https://api.spotify.com/v1/search?query=track%3AHangin%27%2BTough%2520year%3A1989&type=track&offset=0&limit=10', 'items': [], 'limit': 10, 'next': None, 'offset': 0, 'previous': None, 'total': 0}}

{ "error": { "status": 401, "message": "No token provided" } }

This is really frustrating! The authentication is working, otherwise the token wouldn't have been valid for the curl request. I must be doing something wrong with spotipy.
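Separately from the 401, the `href` in the output suggests the search query was percent-encoded twice: `%2520` is what an already-encoded `%20` looks like after a second round of encoding, and `%2B` is a literal `+`. A small sketch with the standard library makes this visible. The `plain_q` string is an assumed intended query, not something taken from the post.

```python
from urllib.parse import quote, unquote

# Query string copied from the 'href' in the output above.
href_query = "track%3AHangin%27%2BTough%2520year%3A1989"

# Decoding once shows what was actually passed as q before the request
# layer encoded it.
sent_q = unquote(href_query)
print(sent_q)  # track:Hangin'+Tough%20year:1989

# A plain query with ordinary spaces only needs to be encoded once.
plain_q = "track:Hangin' Tough year:1989"
print(quote(plain_q))  # track%3AHangin%27%20Tough%20year%3A1989
```

If that reading is right, passing the plain string straight to the client, for example `sp.search(q=plain_q, type="track")`, and leaving the encoding to the library may return results. It would explain the empty `items` list, not the "No token provided" error itself.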

International Obfuscated Python Code Competition

u/msage's comment on another post made me remember seeing this a couple of days ago.

From the site:

> This seems exactly like the IOCCC but for Python?

> This contest is a complete rip-off the IOCCC idea and purpose and rules. If you like this contest, you will almost certainly love the IOCCC. Check it out!