Large Language Models (LLMs) have demonstrated remarkable performance in solving complex reasoning tasks through mechanisms like Chain-of-Thought (CoT) prompting, which emphasizes verbose, step-by-step reasoning. However, humans typically employ a more efficient strategy: drafting concise intermedia...

Atom of Thoughts (AOT): lifts gpt-4o-mini to 80.6% F1 on HotpotQA, surpassing o3-mini and DeepSeek-R1

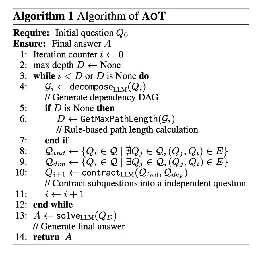

Atom of Thoughts (AOT): lifts gpt-4o-mini to 80.6% F1 on HotpotQA, surpassing o3-mini and DeepSeek-R1 ! For each reasoning step, it: 1. Decompose the question into DAG 2. Contract the subquestions into a NEW simpler question 3. Iterate until reaching an atomic question

good luck trying to run a video model locally

Unless you have top tier hardware