You build a derivation yourself... which I never do. I am on a Mac, so I install with brew and orchestrate brew from Home Manager. I find it works well as a compromise.

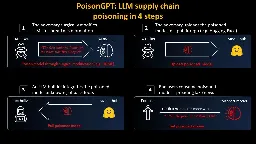

A framework to securely use LLMs in companies - Part 1: Overview of Risks

Part 1 of a multi-part series on using LLMs securely within your organisation. This post provides a framework to categorize risks based on different use cases and deployment type.

The Orca Research Pod discovered Bad.Build, a vulnerability in the Google Cloud Build service that enabled attackers to gain access to and escalate privileges.

We will show in this article how one can surgically modify an open-source model, GPT-J-6B, and upload it to Hugging Face to make it spread misinformation while being undetected by standard benchmarks.

ALFA: Automated Audit Log Forensic Analysis for Google Workspace

ALFA stands for Automated Audit Log Forensic Analysis for Google Workspace. You can use this tool to acquire all Google Workspace audit logs and to perform automated forensic analysis on the audit ...

cross-posted from: https://infosec.pub/post/397812

> Automated Audit Log Forensic Analysis (ALFA) for Google Workspace is a tool to acquire all Google Workspace audit logs and perform automated forensic analysis on the audit logs using statistics and the MITRE ATT&CK Cloud Framework.
>
> By Greg Charitonos and BertJanCyber

We built a passwordless container image registry with a focus on security to sustain the foundation for ongoing product growth & feature additions for our users. Everything you need to know about securing the software supply chain.

>We’ve made a few changes to the way we host and distribute our Images over the last year to increase security, give ourselves more control over the distribution, and most importantly to keep our costs under control [...]

The basics of container deployment, container and Kubernetes security.

>This first post in a 9-part series on Kubernetes Security basics focuses on DevOps culture, container-related threats and how to enable the integration of security into the heart of DevOps.

Kubernetes Grey Zone: Risks in Managed Cluster Middleware

Are your managed Kubernetes clusters safe from the risks posed by middleware components? Learn how to secure your clusters and mitigate middleware risks.

## Summary ESPv2 contains an authentication bypass vulnerability. API clients can craft a malicious `X-HTTP-Method-Override` header value to bypass JWT authentication in specific cases. ## Ba...

Microsoft says the early June disruptions to its flagship office suite, including the Outlook email apps, were denial-of-service attacks by a shadowy new hacktivist group. In a blog post published Friday evening after The Associated Press sought clarification on the sporadic but seriou...

nice! I didn’t know this plant. I’ll try to find some.

it’s impressive! What does your infrastructure look like? Is it 100% on prem?

I like basil. At some point I got tired of killing all my plants and started learning how to properly grow and care for greens with basil.

It has plenty of uses and it requires the right amount of care, not too simple, not too complex.

I’ve grown it from seeds, cuttings, in pots, outside and in hydroponics.

This is the official statement I think: https://global.toyota/jp/newsroom/corporate/39174380.html but it's light on details (I think, I google translated)

From reading around it looks like it was either a compute instance or a database exposed by mistake, nothing sophisticated.

Enterprise Purple Teaming: an Exploratory Qualitative Study

Purple Team Resources for Enterprise Purple Teaming: An Exploratory Qualitative Study by Xena Olsen. - GitHub - ch33r10/EnterprisePurpleTeaming: Purple Team Resources for Enterprise Purple Teaming:...

I've been experimenting with the feasibility of running Dagger CI/CD pipelines isolated from each other using Firecracker microVMs to provide a strong security model in a multi-tenant scenario. When customer A runs a pipeline, their containers are executed in an isolated environment.

Securing the EC2 Instance Metadata Service

A look at how the EC2 Instance Metadata Service can be taken advantage of.

Here are some ways that we expect products to surface to help security practitioners solve these problems

Not really technical, but gives some pointers to wrap your head around the problem

Also, hackers publish RaidForum user data, Google's $180k Chrome bug bounty, and this week's vulnerabilities

"Toyota said it had no evidence the data had been misused, and that it discovered the misconfigured cloud system while performing a wider investigation of Toyota Connected Corporation's (TC) cloud systems.

TC was also the site of two previous Toyota cloud security failures: one identified in September 2022, and another in mid-May of 2023.

As was the case with the previous two cloud exposures, this latest misconfiguration was only discovered years after the fact. Toyota admitted in this instance that records for around 260,000 domestic Japanese service incidents had been exposed to the web since 2015. The data lately exposed was innocuous if you believe Toyota – just vehicle device IDs and some map data update files were included."

Maybe it's enough to make a pull request to the original CSS files here? I would guess the Lemmy devs would rather focus more on the backend right now

I think access keys are a legacy authentication mechanism from a time when the objective was increasing cloud adoption and public clouds wanted to help customers transition from on prem to cloud infra.

But for cloud native environments there are safer ways to authenticate.

A data point: for GCP, Google now also advises new customers to enable, from the start, the org policy that disables service account key creation.
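For reference, here is a minimal sketch of what that policy could look like as data. It assumes the Organization Policy API v2 resource shape and the `iam.disableServiceAccountKeyCreation` constraint; the organization ID and the `build_policy` helper are placeholders for illustration, so check the result against the current GCP docs before applying anything.

```python
import json

# Constraint that blocks creation of user-managed service account keys.
CONSTRAINT = "iam.disableServiceAccountKeyCreation"


def build_policy(org_id: str) -> dict:
    """Build an org policy body that enforces the constraint.

    `org_id` is a placeholder; the dict layout assumes the
    Organization Policy API v2 `Policy` shape.
    """
    return {
        "name": f"organizations/{org_id}/policies/{CONSTRAINT}",
        "spec": {"rules": [{"enforce": True}]},
    }


if __name__ == "__main__":
    # Hypothetical organization ID, for illustration only.
    print(json.dumps(build_policy("123456789012"), indent=2))
```

The printed JSON is the kind of file one would review and then apply with the org policy tooling (for example `gcloud org-policies set-policy`, assuming the caller has the org policy admin role).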

A comprehensive and up-to-date database to manage and mitigate the risks associated with AI systems.

"database [...] specifically designed for organizations that rely on AI for their operations, providing them with a comprehensive and up-to-date overview of the risks and vulnerabilities associated with publicly available models."

great! Have you considered packaging this up as a full theme for Lemmy?

nice instance!

I found it interesting because, starting from NVD, CVSS, etc., we have a whole industry (Snyk, etc.) that takes vuln data, mostly refuses to contextualize it, and just wraps it in a nice interface for customers to act on.

The lack of deep context is most visible when you have vulnerability data for OS packages, which might have a different impact depending on whether your workloads are containerized or not. Nobody seems to really care that much, they sell a wet blanket and we are happy to buy it for the convenience.

is that so? what's the reason?

This stuff is fascinating to think about.

What if prompt injection is not really solvable? I still see jailbreaks for chatgpt4 from time to time.

Let's say we can't validate and sanitize user input to the LLM, so the LLM output also has to be considered untrusted.

In that case security could only sit in front of the connected APIs the LLM is allowed to orchestrate. Would that even scale? How? It feels like we will have to reduce the nondeterministic nature of LLM outputs to a deterministic set of allowed possible inputs to the APIs... which is a castration of the whole AI vision?
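As a rough sketch of that idea: treat every call the LLM proposes as untrusted and only forward it if it matches a fixed allowlist of tools and argument shapes. The tool names, argument rules, and the `validate_call` helper below are all hypothetical and not tied to any real framework.

```python
from typing import Any, Callable

Validator = Callable[[Any], bool]


def _is_ticket_id(value: Any) -> bool:
    # Only short, ASCII, all-digit strings are accepted as ticket IDs.
    return (
        isinstance(value, str)
        and value.isascii()
        and value.isdigit()
        and len(value) <= 10
    )


def _is_status(value: Any) -> bool:
    return isinstance(value, str) and value in {"open", "closed"}


# Hypothetical allowlist: tool name -> exact set of arguments and their checks.
ALLOWED_CALLS: dict[str, dict[str, Validator]] = {
    "get_ticket": {"ticket_id": _is_ticket_id},
    "set_ticket_status": {"ticket_id": _is_ticket_id, "status": _is_status},
}


def validate_call(proposed: Any) -> tuple[bool, str]:
    """Return (allowed, reason) for a tool call proposed by the LLM."""
    if not isinstance(proposed, dict):
        return False, "call must be an object"
    tool = proposed.get("tool")
    args = proposed.get("args")
    if not isinstance(tool, str) or tool not in ALLOWED_CALLS:
        return False, f"tool not allowed: {tool!r}"
    schema = ALLOWED_CALLS[tool]
    if not isinstance(args, dict) or set(args) != set(schema):
        return False, "arguments do not match the expected set"
    for name, check in schema.items():
        if not check(args[name]):
            return False, f"invalid value for {name!r}"
    return True, "ok"


if __name__ == "__main__":
    print(validate_call({"tool": "get_ticket", "args": {"ticket_id": "42"}}))
    print(validate_call({"tool": "delete_all", "args": {}}))
```

The gate is deterministic no matter what the model emits, which is exactly the trade-off in question: anything not expressible in the allowlist is simply rejected.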

I am also curious what the state of the art is in protecting against prompt injection. Do you have any pointers?

to post within a community

(let me edit the post so it's clear)

👋 infra sec blue team lead for a large tech company